RealityServer 5.1 is here, and it includes something many users have been asking for. This release adds the new AI Denoiser for fast, high-quality denoising of your images using state-of-the-art machine learning technology. You really need to try it to fully appreciate the performance benefits, but we’ll show you a few images to give you a feel for what it can do. We are also adding support for the new NVIDIA Volta architecture and, as usual, a range of smaller enhancements.

Iray Photoreal mode has always had one issue: in quite a few use cases (e.g., complex architectural interiors), some lingering noise can remain in final renders and take a significant amount of time to remove. While the overall image quality is very high, this last little bit of noise can take anywhere from minutes to hours to clear, depending on your scene. We finally have a solution for this problem.

The new AI Denoiser is based on an artificial intelligence technique known as machine learning. It has been trained on an extensive set of Iray scenes, many of them provided by migenius customers, to remove noise without removing real detail. Training the denoiser is time consuming, but NVIDIA performs it on a large number of GPUs, so the denoiser comes pre-trained. You can then run it at near real-time speeds on your own local GPU.

Unlike many traditional offline denoisers, the AI Denoiser is fast enough to be used interactively, typically taking less than 100 ms to run. Some additional data needs to be stored during rendering, which increases memory requirements, but the performance gains are substantial. Below is a constant-time comparison.

Above you can see a crop of the bathroom rendering shown earlier, taken from an area that exhibits more persistent noise. The top row shows conventional Iray renderings at various render times, while the bottom row shows the same render times with the AI Denoiser activated. These renders were made on a TITAN X (Pascal) GPU. At the 30s end, the denoised image is already far more useful than the 300s image without denoising, while at the other end the 120s denoised image approaches the quality of the 1200s render without denoising.

In practice, the speed-up from the denoiser depends on your content, but for many use cases we are seeing 5-10x. There are of course trade-offs for this performance: denoising can introduce some smoothing and, where the source image contains insufficient information, other artifacts. In most cases, however, these are preferable to the noise. Here is an example from one of our customers, TapGlance, using their real-world content.

120 Second Render Time. Scene by John O’Connor. Created in TapGlance.


The AI Denoiser can be enabled for all rendering or only after a certain number of iterations have been computed; the latter ensures the algorithm has enough information to work with before it is applied. Our pre-release customers have called this feature a game changer for producing final-quality images in a fraction of the time. It’s also fully automatic, so there are no complex settings to tune: just turn it on and go.
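The "always on" versus "only after N iterations" choice described above amounts to a simple gate on the iteration count. The Python below is a minimal sketch of that rule only; `DenoiserSettings`, `enabled` and `start_iteration` are hypothetical names for illustration, not actual Iray or RealityServer options.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DenoiserSettings:
    # Hypothetical settings; these are NOT actual Iray or RealityServer option names.
    enabled: bool = True
    start_iteration: int = 0  # 0 (or 1) means every progressive update is denoised


def should_denoise(settings: DenoiserSettings, iteration: int) -> bool:
    """Return True if the frame produced at `iteration` (1-based) should be denoised."""
    return settings.enabled and iteration >= settings.start_iteration


def iterations_to_denoise(settings: DenoiserSettings, total_iterations: int) -> List[int]:
    """List the iterations whose output would be passed through the denoiser."""
    return [i for i in range(1, total_iterations + 1) if should_denoise(settings, i)]


if __name__ == "__main__":
    print(iterations_to_denoise(DenoiserSettings(start_iteration=0), 5))  # [1, 2, 3, 4, 5]
    print(iterations_to_denoise(DenoiserSettings(start_iteration=4), 6))  # [4, 5, 6]
```

Delaying the start trades a few noisy early frames for denoiser input that already contains enough samples to separate noise from detail.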

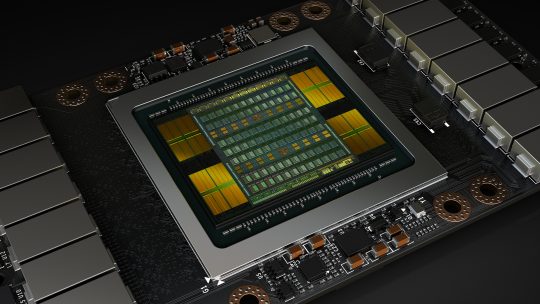

The NVIDIA Volta architecture is finally starting to appear, with cloud providers now offering access to the Tesla V100. RealityServer 5.1 includes full support for Volta-based cards, and our early benchmarking shows an impressive 50-60% performance improvement over the previous-generation Pascal architecture when comparing similar cards (for example, the Tesla P100 versus the Tesla V100). Look out for more Volta-based cards in the future; once we can get our hands on more of them, we plan to do more extensive benchmarking.

Many users have asked us how to get data up to RealityServer. Until now we have usually relied on either creating the scene data programmatically or uploading it through a side channel to the file system of the server running RealityServer. In RealityServer 5.0 we added the image_reset_from_base64 command and extended the import_scene_elements_from_string command to import any data type supported by the standard RealityServer importers. However, there are still many cases this doesn’t cover, and many applications need a general way to upload content.
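The image_reset_from_base64 command lets a client push image data through the JSON-RPC API as base64 text. As a minimal sketch, the Python below builds such a request body with only the standard library. It assumes a JSON-RPC 2.0 style envelope, and the parameter names `image_name` and `data` are hypothetical placeholders, not the documented argument names; check the command reference before relying on them.

```python
import base64
import json


def build_image_upload_request(image_bytes: bytes, image_name: str, request_id: int = 1) -> str:
    """Build a JSON-RPC request body that carries raw image bytes as base64 text.

    The keys "image_name" and "data" are hypothetical placeholders; consult the
    image_reset_from_base64 documentation for the real argument names.
    """
    payload = {
        "jsonrpc": "2.0",
        "method": "image_reset_from_base64",
        "params": {
            "image_name": image_name,  # hypothetical: name of an existing image element
            "data": base64.b64encode(image_bytes).decode("ascii"),  # hypothetical key
        },
        "id": request_id,
    }
    return json.dumps(payload)


if __name__ == "__main__":
    # The PNG signature bytes stand in for a real file read with open(path, "rb").read().
    print(build_image_upload_request(b"\x89PNG\r\n\x1a\n", "my_texture_image"))
```

Base64 inflates the payload by roughly a third, which is one reason a dedicated upload path is attractive for larger files.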

This release adds a new built-in upload server, which you can optionally enable in your realityserver.conf, so you can get content onto your server over HTTP/HTTPS using standard form-based upload methods. We plan to extend the upload server with new features in future releases, such as automated unzipping.
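Because the upload server accepts standard form-based uploads, any HTTP client that can produce a `multipart/form-data` request (RFC 7578) should work. Below is a minimal standard-library Python sketch that encodes one file and prepares, but does not send, a POST request. The URL, port and form field name are hypothetical; the real values depend on how the upload server is configured in realityserver.conf.

```python
import urllib.request
import uuid


def build_multipart_body(field_name: str, filename: str, content: bytes,
                         content_type: str = "application/octet-stream"):
    """Encode a single file as a multipart/form-data body; returns (body, Content-Type)."""
    boundary = uuid.uuid4().hex  # random boundary that will not appear in typical content
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field_name}"; filename="{filename}"\r\n'
        f"Content-Type: {content_type}\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return head + content + tail, f"multipart/form-data; boundary={boundary}"


def make_upload_request(url: str, field_name: str, filename: str, content: bytes):
    """Prepare (but do not send) a POST request carrying the file."""
    body, content_type = build_multipart_body(field_name, filename, content)
    return urllib.request.Request(url, data=body, method="POST",
                                  headers={"Content-Type": content_type})


if __name__ == "__main__":
    # Hypothetical URL and field name; adjust to your own upload server configuration.
    req = make_upload_request("http://localhost:8080/uploads/", "file", "scene.mi", b"# mi data\n")
    print(req.get_method(), req.full_url, req.get_header("Content-type"))
    # To actually send it: urllib.request.urlopen(req)
```

An HTML `<form enctype="multipart/form-data">` in a browser client produces the same kind of request.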

In RealityServer 5.0 we added the server-side V8 engine, and some basic canvas functionality came with it. This opened up some cool possibilities, but it quickly became evident that more functionality was needed. In RealityServer 5.1 we have extended the render_to_canvases command to return an array of canvases that can be used directly in V8. This is great for automating the rendering of multiple channels at once and perfect for automating Light Path Expression based compositing (spoiler alert: we are working on something in that area for an update).
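Light Path Expression compositing works because light transport is linear: if a set of LPE layers partitions the light paths (for example, one layer per light source), the layers sum back to the full render, and weighting them rebalances the lights without re-rendering. The Python below is a minimal sketch of that arithmetic only. It models a canvas as a hypothetical list of rows of linear single-channel floats and does not use the V8 Canvas API or show how migenius implements compositing.

```python
from typing import List, Sequence

Canvas = List[List[float]]  # hypothetical: one linear (not gamma-encoded) float per pixel


def composite(layers: Sequence[Canvas], weights: Sequence[float]) -> Canvas:
    """Weighted sum of LPE layers: out[y][x] = sum_i weights[i] * layers[i][y][x]."""
    if not layers or len(layers) != len(weights):
        raise ValueError("need at least one layer and exactly one weight per layer")
    height, width = len(layers[0]), len(layers[0][0])
    out = [[0.0] * width for _ in range(height)]
    for layer, w in zip(layers, weights):
        if len(layer) != height or any(len(row) != width for row in layer):
            raise ValueError("all layers must have the same resolution")
        for y in range(height):
            for x in range(width):
                out[y][x] += w * layer[y][x]
    return out
```

With all weights set to 1.0 the result reproduces the full render; any tone mapping or gamma correction should only be applied after summing.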

We also found several use cases where resizing image canvases directly in RealityServer would be useful, so we added a resize method to the Canvas object in V8.

This can be really useful when you need a smaller version of an image, for example when producing thumbnails or feeding an image processing algorithm that works better at lower resolutions. The (admittedly useless) example above uses the resize method to prepare the canvas for conversion to ASCII art (this example is included in RealityServer 5.1 for your enjoyment).
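The resize-then-convert idea behind the ASCII art example can be sketched independently of RealityServer. The Python below uses nearest-neighbour resampling and a brightness-to-character ramp on a hypothetical grayscale grid with values in [0, 1]; it is not the bundled V8 example, and the filtering used by the actual Canvas resize method may differ.

```python
from typing import List

Gray = List[List[float]]  # hypothetical grayscale image, values in [0, 1]

RAMP = " .:-=+*#%@"  # sparse-to-dense ramp: 0.0 maps to a space, 1.0 to "@"


def resize_nearest(img: Gray, new_w: int, new_h: int) -> Gray:
    """Nearest-neighbour resize: each output pixel copies the source pixel it maps onto."""
    old_h, old_w = len(img), len(img[0])
    return [[img[y * old_h // new_h][x * old_w // new_w] for x in range(new_w)]
            for y in range(new_h)]


def to_ascii(img: Gray) -> str:
    """Map each pixel's brightness to a character from RAMP, one text line per row."""
    last = len(RAMP) - 1
    return "\n".join(
        "".join(RAMP[round(min(max(v, 0.0), 1.0) * last)] for v in row) for row in img
    )
```

Terminal characters are roughly twice as tall as they are wide, so halving the target height relative to the width usually keeps the proportions recognisable.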

All of this is great, but once this functionality existed, people also wanted to write the resulting canvases to disk for later use or persistence. We have now added a very basic fs module to V8 so you can read and write binary data on disk and create directories. We plan to extend it with additional functionality in the future.

We have been shipping the Iray Viewer desktop application with RealityServer for some time, and many users noticed that it included a nice plugin based on the Assimp library that can import (and export) many file formats. Some of you even found that you could copy this plugin out of Iray Viewer into your RealityServer installation and use it there. Enough people were doing this that we decided to include it as a standard feature.

In the process we reworked things a little to make the plugin fully compatible with RealityServer and updated the bundled version of Assimp to 4.0.1. We enabled all formats that Assimp supports for import and export except OBJ, which we disabled in favour of our existing, more complete OBJ importer. Your mileage will vary significantly between formats in terms of what gets imported: some will give you reasonable materials and geometry, while others may give you only geometry. We still recommend building your system around our .mi file format, but there are many use cases where this plugin can help.

If you make use of the Assimp plugin, we’d encourage you to support the project with a donation, as we have done, or by contributing. It is being actively developed, and details of how to support the project are available on its GitHub page.

Import formats:

Export formats:

RealityServer 5.1 introduces a fairly major change to the way MDL-based materials are handled in the JSON-RPC API. Unfortunately, these changes will break some existing applications; however, they are required to ensure compatibility with future versions of Iray and MDL, and they also make it much clearer how MDL types are handled.

First up, we have added a new set of commands to get and set MDL material and function arguments. These new commands can get and set all types of MDL arguments (not just those with a natural mapping to RealityServer types, as was previously the case) and make it easy to connect MDL functions to arguments. The following commands have been added:

Refer to the command documentation for more details. These replace and deprecate the usage of element_get_attribute, element_get_attributes, element_set_attribute and element_set_attributes with MDL arguments. If your application is using these commands you will need to update it to use these new versions.

The mdl_get_definition command has been expanded to support obtaining the definitions of all MDL types: Module, Material_definition and Function_definition, as well as custom types defined in modules. When getting the definition of Material_instance or Function_call elements, the command can also return the definition details rather than just the name, potentially saving an additional command call. In addition, the mdl_name argument of this command has been deprecated and replaced with element_name to match the other MDL commands.
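For client code that still sends the deprecated mdl_name argument, the migration is a key rename. The Python below is a minimal sketch of a client-side shim that renames mdl_name to element_name and serialises the call as a JSON-RPC 2.0 style request. The helper names and the example element name are hypothetical; only the command name and the two argument names come from this release.

```python
import json
from typing import Any, Dict


def migrate_mdl_get_definition_params(params: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the params with the deprecated mdl_name renamed to element_name."""
    migrated = dict(params)  # leave the caller's dict untouched
    if "mdl_name" in migrated:
        if "element_name" in migrated:
            raise ValueError("pass either mdl_name or element_name, not both")
        migrated["element_name"] = migrated.pop("mdl_name")
    return migrated


def build_request(method: str, params: Dict[str, Any], request_id: int = 1) -> str:
    """Serialise a JSON-RPC 2.0 style request body."""
    return json.dumps({"jsonrpc": "2.0", "method": method, "params": params, "id": request_id})


if __name__ == "__main__":
    old_params = {"mdl_name": "mdl::example::my_material"}  # hypothetical element name
    print(build_request("mdl_get_definition", migrate_mdl_get_definition_params(old_params)))
```

Applying the shim in one place lets the rest of an application keep its existing call sites while it is updated to the new argument name.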

You now also get all of the MDL annotation details when using these commands, so you can use them to build more attractive user interfaces or to validate soft and hard limits based on the various UI annotations available in many MDL files.
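MDL expresses these limits with the standard `::anno::hard_range` annotation (values must stay inside it) and `::anno::soft_range` annotation (the suggested UI range, which users may exceed). The Python below is a minimal sketch of validating a float argument against both. It assumes the annotation data has already been converted into a simple dict keyed by annotation name; that dict shape is hypothetical, and the structure RealityServer actually returns will need converting first.

```python
from typing import Dict, Optional, Tuple

Range = Tuple[float, float]


def validate_argument(value: float, annotations: Dict[str, Range]) -> Tuple[float, bool]:
    """Clamp `value` to ::anno::hard_range and report whether it lies in ::anno::soft_range.

    `annotations` is a hypothetical, already-parsed mapping such as
    {"::anno::hard_range": (0.0, 1.0), "::anno::soft_range": (0.0, 0.5)}.
    """
    hard: Optional[Range] = annotations.get("::anno::hard_range")
    soft: Optional[Range] = annotations.get("::anno::soft_range")
    if hard is not None:
        low, high = hard
        value = min(max(value, low), high)  # hard limits must never be exceeded
    # Soft limits only guide the UI (e.g. slider extent), so we report rather than clamp.
    in_soft = soft is None or soft[0] <= value <= soft[1]
    return value, in_soft
```

A UI might size a slider from the soft range and show a warning, rather than an error, when a typed value falls outside it.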

The following MDL commands have been deprecated, as their functionality is now covered by the new get/set commands: mdl_attach_function_to_argument, mdl_get_argument_attachment and mdl_is_argument_attached. The following commands have also been deprecated with regard to their use with MDL: element_attribute_exists, element_get_attribute, element_get_attribute_type, element_get_attributes, element_set_attribute and element_set_attributes. Support for getting and setting MDL arguments with these commands will be removed in future versions.

The element_get_attribute_type command now returns different results for MDL arguments: previously it returned the RealityServer data type name, now it returns the MDL type name.

The element_get_type command returns different names for MDL elements than in RealityServer 5.0. The new type names are Compiled_material, Function_call, Function_definition, Material_definition, Material_instance and Module; previously these had an Mdl_ prefix.
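Client code that branches on element_get_type results has to handle the new names. If an application must talk to both 5.0 and 5.1 servers during a migration, one option is to normalise names on the client. The Python sketch below assumes that each 5.0 name was simply the 5.1 name with an Mdl_ prefix, possibly followed by a lower-case letter; this release note only says the old names had Mdl_ prefixes, so verify the exact spellings against real 5.0 responses.

```python
NEW_MDL_TYPE_NAMES = {
    "Compiled_material", "Function_call", "Function_definition",
    "Material_definition", "Material_instance", "Module",
}


def normalize_mdl_type_name(type_name: str) -> str:
    """Map an assumed 5.0-style "Mdl_..." name onto the 5.1 spelling; other names pass through."""
    if type_name.startswith("Mdl_"):
        rest = type_name[len("Mdl_"):]
        candidate = rest[:1].upper() + rest[1:]  # assumption: only the first letter may differ
        if candidate in NEW_MDL_TYPE_NAMES:
            return candidate
    return type_name


def is_mdl_type(type_name: str) -> bool:
    """True if an element_get_type result names an MDL element under either naming scheme."""
    return normalize_mdl_type_name(type_name) in NEW_MDL_TYPE_NAMES
```

Names that do not match a known 5.1 MDL type are returned unchanged, so non-MDL types such as cameras are unaffected.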

We have encountered quite a few cases where you might want the image-space coordinates of a point in 3D world space. For example, you might want to draw a client-side widget at the location of an object and overlay it on the rendered image. We have added the new project_point command for this purpose. It takes the scene name and a 3D point and returns the 2D image-space location of that point, or an error if the point does not project onto the screen.
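The geometry behind a command like project_point is the standard pinhole-camera projection: transform the point into camera space, divide by depth, and map the result to pixels. The Python below is a minimal sketch of that math for a simple look-at camera. The camera parameterisation (eye, target, up, horizontal field of view) and the top-left pixel origin with y pointing down are assumptions for illustration; Iray cameras are described differently, and in practice the command itself should be used since it accounts for the scene's actual camera.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _normalize(a: Sequence[float]) -> Vec3:
    n = math.sqrt(_dot(a, a))  # fails if `a` is zero, e.g. when view direction is parallel to up
    return (a[0] / n, a[1] / n, a[2] / n)


def project_to_pixels(point: Vec3, eye: Vec3, target: Vec3, up: Vec3,
                      hfov_deg: float, width: int, height: int) -> Optional[Tuple[float, float]]:
    """Project a world-space point through a pinhole camera to pixel coordinates.

    Returns (x, y) with the origin at the top-left and y pointing down (an assumed
    convention), or None if the point is behind the camera or outside the image.
    """
    forward = _normalize(_sub(target, eye))
    right = _normalize(_cross(forward, up))
    true_up = _cross(right, forward)
    rel = _sub(point, eye)
    z = _dot(rel, forward)  # depth along the viewing direction
    if z <= 0.0:
        return None  # behind (or exactly at) the camera
    half_w = math.tan(math.radians(hfov_deg) / 2.0)
    half_h = half_w * height / width  # square pixels assumed
    ndc_x = _dot(rel, right) / (z * half_w)  # -1 .. 1 across the image
    ndc_y = _dot(rel, true_up) / (z * half_h)
    if abs(ndc_x) > 1.0 or abs(ndc_y) > 1.0:
        return None  # projects outside the visible image
    return ((ndc_x + 1.0) * 0.5 * width, (1.0 - ndc_y) * 0.5 * height)
```

Returning None mirrors the behaviour described for project_point, which reports an error when a point does not land on the screen.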

We are working on some great new features for RealityServer 5.2 but as always would love to hear more from our users on what you would like to see. If you want to know more about RealityServer, please contact us.

Paul Arden has worked in the Computer Graphics industry for over 20 years, co-founding the architectural visualisation practice Luminova out of university before moving to mental images and NVIDIA to manage the Cloud-based rendering solution, RealityServer, now managed by migenius where Paul serves as CEO.