RealityServer 5.2 is here and adds some great functionality. Hugely expanded glTF 2.0 importer support, wireframe rendering, lightmap rendering, section plane capping, MDL 1.4 support, UDIM support and many more features have been added along with many fixes and smaller enhancements based on extensive customer feedback. In this post we will run through some of the most interesting functionality and how it can help you build your applications.

Previous versions of RealityServer already supported the glTF file format, however the importer only handled very basic materials. In this release we have significantly expanded support for glTF 2.0 to include full support for its built-in PBR material model, embedded texture data, various extensions and many other features.

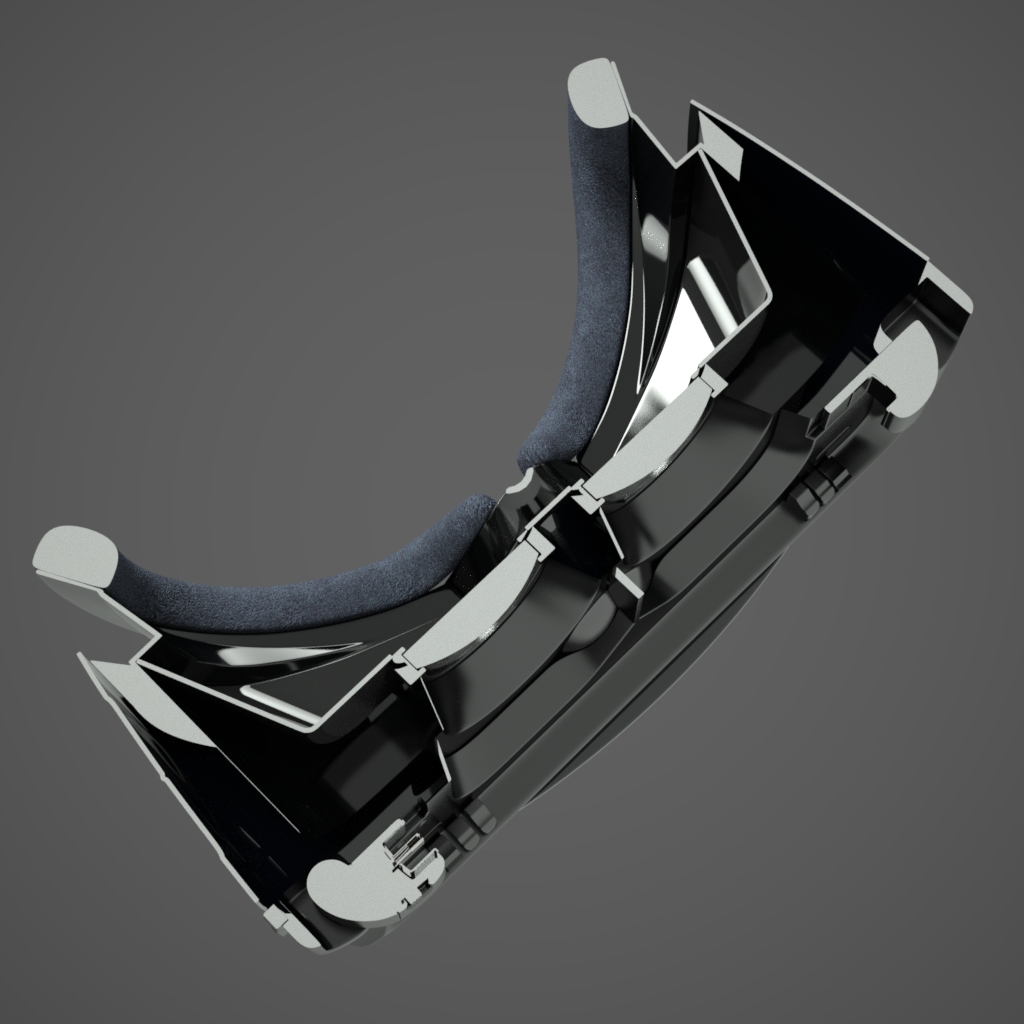

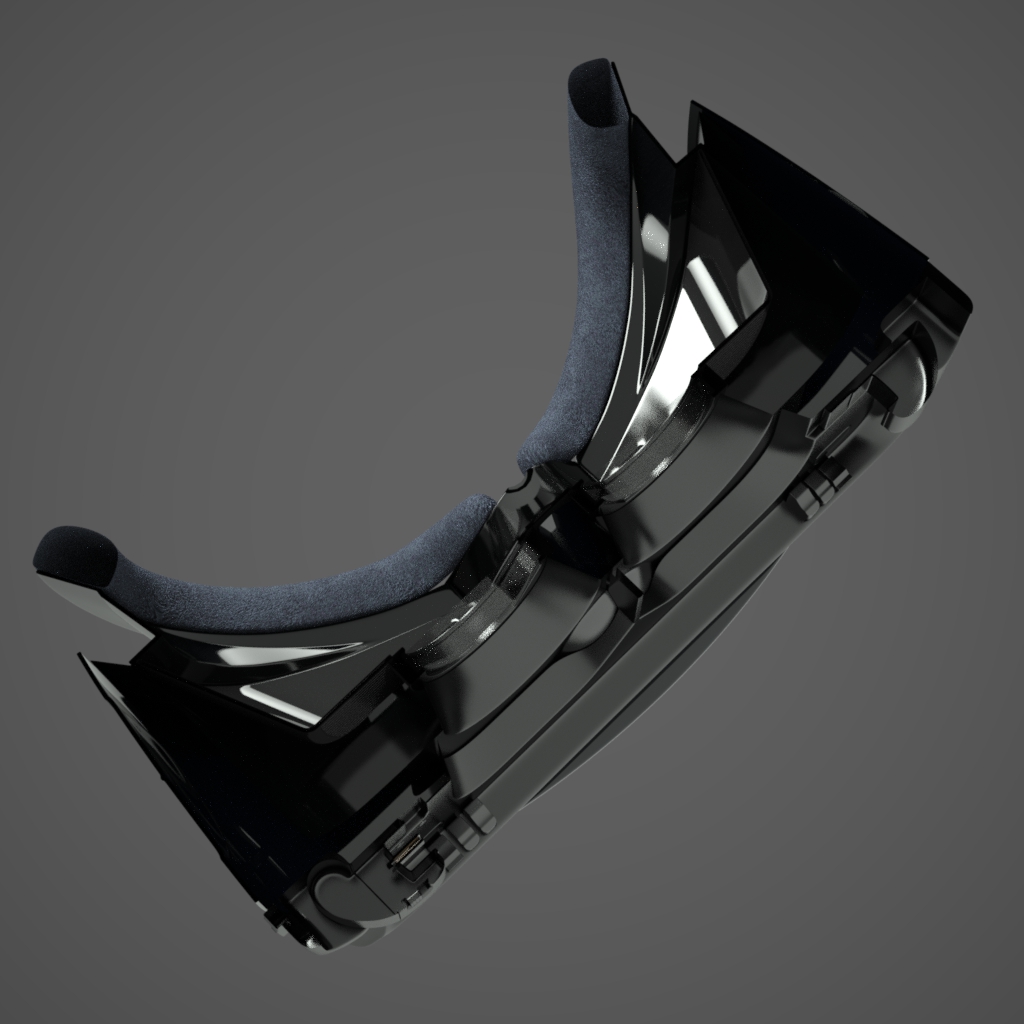

The image to the right has been rendered in RealityServer and the model was directly downloaded from SketchFab as a glTF 2.0 file. It uses many of the recently implemented features and in previous releases it would have basically just been a grey model. As can be seen here you can achieve very good quality results directly from glTF 2.0 assets with the new importer, making it a viable storage format for RealityServer models.

A few small caveats with the new importer. We don’t currently support the KHR_texture_transform extension, animation, the doubleSided material property, vertex colours or orthographic cameras. We will implement additional functionality as we start to hear more from our customers on what features they would most like to see. As a side note, when we added support for embedded textures to the glTF importer this also had the nice side effect of adding it to FBX import as well.

HelmetConcept by floor1o used under CC BY 4.0

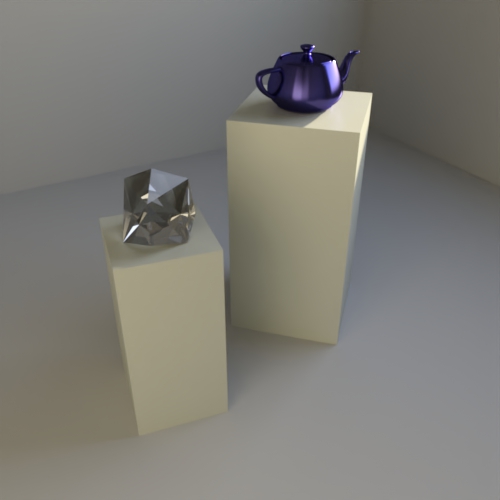

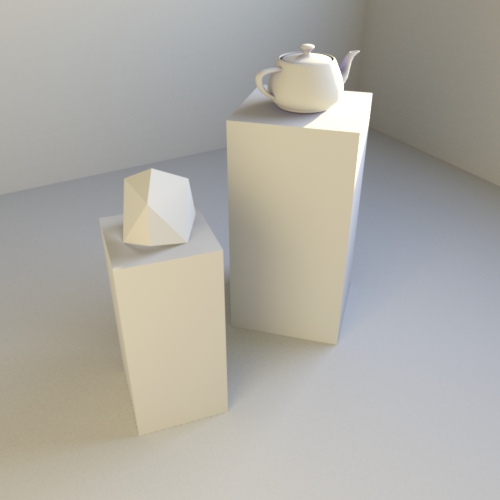

We’ve had quite a few requests for lightmap baking in RealityServer from customers who are combining RealityServer with real-time rendering systems such as Unreal Engine, Unity and WebGL. Even though many of these systems have their own light baking solutions, they often lack the speed and accuracy of the Iray rendering engine built into RealityServer. Here is a quick example showing a normal rendering on the left and baked lightmap textures applied to the emission channel of the material for visualisation on the right.

Conventional Image with Iray Photoreal

Lightmapped Irradiance Rendering

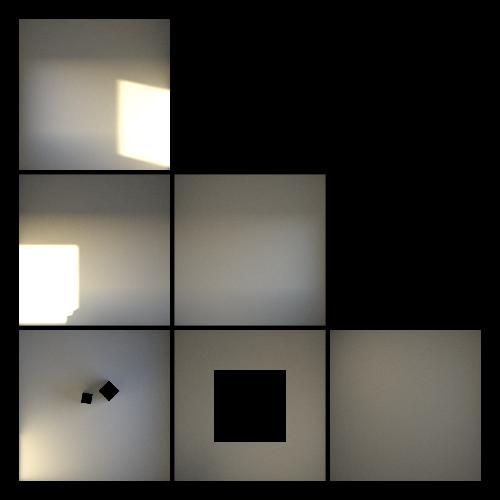

Baking to lightmaps in RealityServer is implemented as a new rendering mode. You select it just like the iray or irt rendering modes, however you provide it with additional render context options to tell it which objects in the scene need to be baked. After running a lightmap render you will obtain images like this.

Lightmap for Walls

Lightmap for Objects

These images represent the irradiance at the given location on the surface, in other words, the light arriving at the surface (including its colour). In a real-time rendering engine these images can then replace the diffuse lighting that would normally need to be computed at runtime. Here is a small example application created with PlayCanvas to make use of the above lightmaps in WebGL. You can orbit around by clicking and dragging.

The lighting in this scene is pre-computed by RealityServer using the physically based daylight model. All light is coming through the window opening and much of the lighting is indirect. There are no actual lights in the scene. In PlayCanvas, materials have a specific lightmap property where you can assign textures. It also fully supports high dynamic range lightmaps, which you can upload as .hdr files. During upload they are converted to RGBM based PNG files. Note that if you are using PlayCanvas with high dynamic range lightmaps you will want to turn off mipmapping on those textures to avoid banding artifacts.
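To give a feel for what an RGBM based file stores, here is a minimal sketch of the generic RGBM scheme: a shared multiplier goes into the fourth channel and the colour is divided by it. The range constant of 8 and the function names are assumptions for illustration, and this is not necessarily the exact conversion PlayCanvas performs on upload.

```python
import math

RGBM_RANGE = 8.0  # assumed maximum multiplier; engines differ on this value


def rgbm_encode(rgb, max_range=RGBM_RANGE):
    """Pack a linear HDR colour into four 8-bit values (R, G, B, M)."""
    # Clamp to the representable range and normalise to 0..1.
    r, g, b = (min(max(c, 0.0), max_range) / max_range for c in rgb)
    # Shared multiplier, rounded up to the next 8-bit step so that the
    # divided colour channels never exceed 1.0.
    m = math.ceil(max(r, g, b, 1e-6) * 255.0) / 255.0
    channels = tuple(round(min(c / m, 1.0) * 255.0) for c in (r, g, b))
    return channels + (round(m * 255.0),)


def rgbm_decode(rgbm, max_range=RGBM_RANGE):
    """Recover an approximate linear HDR colour from 8-bit RGBM values."""
    r, g, b, m = (c / 255.0 for c in rgbm)
    scale = m * max_range
    return (r * scale, g * scale, b * scale)


if __name__ == "__main__":
    packed = rgbm_encode((4.0, 2.0, 0.5))
    print(packed, rgbm_decode(packed))
```

Because the stored colour only makes sense together with its own multiplier, averaging neighbouring texels that carry different multipliers does not average the underlying HDR values. That is one plausible reason why filtered mip levels of such textures show artifacts.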

The details of using the lightmap renderer are too in-depth for this post, however you can find it fully documented in your RealityServer Document Center by going to RealityServer Features → Renderers → Lightmap or check it out online here.

While we tend to focus on photorealism here at migenius, there are a lot of times where you might want to see the underlying geometry of your 3D model, or just need a very fast rendering mode to position the camera or the objects in your scene. Users would often switch to the normal canvas of Iray for this purpose but that is not ideal, so in RealityServer 5.2 we are introducing the Wireframe rendering mode.

The wireframe rendering mode is OpenGL based and renders the triangle outlines of the geometry in your model. You can control the colour of the wireframe, fill colour of the triangles, the background colour and also thickness of the lines and the number of samples to take for antialiasing. As can be seen in the image below you can produce high quality wireframe images suitable not just for interactive use.

Because there is no lighting and all of the rendering is using rasterization it is also extremely fast. This makes the wireframe renderer also suitable for interactive use, similar to how you would use the wireframe mode in the viewport of any 3D content creation tool.

We’ve implemented the renderer so that it uses headless OpenGL. This means you don’t have to have an X-server running if you are deploying under Linux, which greatly simplifies the configuration. For more details on all of the options available check out the documentation in your RealityServer Document Center by going to RealityServer Features → Renderers → Wireframe or check it out online here.

For quite some time Iray has had functionality for doing some basic mesh processing operations however this was never exposed in RealityServer. In this release we are adding the following commands (there are also plural versions of the commands which take multiple meshes as input, they simply have the suffix meshes instead of mesh).

This command will weld vertices of a mesh that are within a certain tolerance and the meshes variant can be used to join multiple meshes together into one (with or without tolerance based vertex welding). It can be very useful for reducing object counts in scenes with a lot of meshes and the vertex welding part is useful for joining vertices to later allow simplification with the geometry_simplify_mesh command.
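As a rough illustration of what tolerance based vertex welding means, the sketch below merges vertices that snap to the same grid cell and remaps the triangle indices. It is a generic technique written for this post, not the algorithm Iray uses, and the function name is hypothetical.

```python
def weld_vertices(vertices, triangles, tolerance=1e-4):
    """Merge vertices that snap to the same tolerance-sized grid cell.

    Grid snapping is an approximation: two vertices closer than the
    tolerance can still land in neighbouring cells and stay separate.
    Returns (welded_vertices, remapped_triangles).
    """
    cell_to_index = {}
    remap = []   # old vertex index -> new vertex index
    welded = []
    for vertex in vertices:
        key = tuple(round(c / tolerance) for c in vertex)
        if key not in cell_to_index:
            cell_to_index[key] = len(welded)
            welded.append(vertex)
        remap.append(cell_to_index[key])

    remapped = []
    for a, b, c in triangles:
        a, b, c = remap[a], remap[b], remap[c]
        # Drop triangles that collapsed to a line or point after welding.
        if a != b and b != c and a != c:
            remapped.append((a, b, c))
    return welded, remapped


if __name__ == "__main__":
    # Two triangles sharing an edge, stored with duplicated vertices.
    verts = [(0, 0, 0), (1, 0, 0), (0, 1, 0),
             (1, 0, 0.00001), (0, 1, 0), (1, 1, 0)]
    tris = [(0, 1, 2), (3, 5, 4)]
    print(weld_vertices(verts, tris, tolerance=1e-3))
```

After welding, the two triangles reference the same vertices along their shared edge, which is the connectivity a simplifier needs in order to collapse edges across the seam.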

If you have a Polygon_mesh object that you would like to ensure is all triangles this command will take the arbitrary polygon mesh and convert all of the polygons into triangles. This is usually for fairly specific reasons and if you need it you probably already know why.
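For readers who have not met polygon triangulation before, the simplest variant is a fan around the first vertex of each polygon. The sketch below shows that idea only. It assumes convex polygons, the function names are made up for illustration, and it says nothing about how the Iray command handles concave polygons or holes.

```python
def fan_triangulate(polygon):
    """Split one convex polygon (a list of vertex indices) into triangles."""
    if len(polygon) < 3:
        raise ValueError("a polygon needs at least three vertices")
    first = polygon[0]
    # An n-sided polygon yields n - 2 triangles sharing the first vertex.
    return [(first, polygon[i], polygon[i + 1])
            for i in range(1, len(polygon) - 1)]


def triangulate_mesh(polygons):
    """Triangulate every polygon of a mesh given as lists of indices."""
    triangles = []
    for polygon in polygons:
        triangles.extend(fan_triangulate(polygon))
    return triangles


if __name__ == "__main__":
    # One quad and one pentagon.
    print(triangulate_mesh([[0, 1, 2, 3], [3, 2, 4, 5, 6]]))
```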

Here we use a mesh decimation algorithm to simplify a mesh so that it can be represented with fewer triangles. The parameters for this are fairly complex and you’ll need to refer to the command documentation as well as the Iray documentation for IGeometry_simplifier. The simplifier is not really comparable to the current state of the art in the field, however it can be useful for quite a few simplification needs nonetheless. Note that there is no plural version of this command due to a bug in Iray preventing multiple meshes from being simplified at once.

RealityServer 5.2 ships with Iray 2018.0.2 build 302800.7019. We will be updating to Iray 2018.1.2 in the near future, however this wasn’t available in time for our initial release. In addition to the features described below, there were significant improvements to X-Rite AxF support and MDL 1.4 support was added. Check out the neurayrelnotes.pdf file included with your RealityServer installation for full details. Here is a quick rundown of what we think are the most relevant changes in Iray 2018 which are now available in RealityServer.

Section plane capping is a new feature which allows you to fill the volume of geometry which has been cut away by a section plane object with a solid diffuse colour. This is great for diagrams and other illustrations where you don’t really want to see the edges and internals of the geometric volumes.

Note that for this feature to work your model must have correct normals. Without this Iray is unable to correctly determine when rays move into and out of the volume of the object and so will produce incorrect results. For models originating from solid modelling systems this should already be the case, others may require manual corrections.

Two new attributes have been made available on the Options element to control this feature. They are section_caps_enabled and section_caps_color. These allow you to enable and disable the feature and control the colour of the filled caps. The feature can be combined with light clipping, however you’ll probably usually want to disable that.
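As a sketch of how these two attributes might be set over JSON-RPC, the snippet below builds a batch of element_set_attribute calls. The attribute names come from this post. Everything else is an assumption to check against the command documentation: the parameter names, the "Boolean" and "Color" type strings, the colour value layout and the options element name "myOptions".

```python
import json


def build_section_cap_commands(options_name, enabled, color):
    """Build a JSON-RPC 2.0 batch that sets the section cap attributes.

    Parameter names, type strings and the colour layout are assumptions
    for illustration and may differ from the real command signature.
    """
    r, g, b = color
    settings = [
        ("section_caps_enabled", "Boolean", bool(enabled)),
        ("section_caps_color", "Color", {"r": r, "g": g, "b": b}),
    ]
    batch = []
    for request_id, (name, type_name, value) in enumerate(settings, start=1):
        batch.append({
            "jsonrpc": "2.0",
            "id": request_id,
            "method": "element_set_attribute",
            "params": {
                "element_name": options_name,   # hypothetical element name
                "attribute_name": name,
                "attribute_type": type_name,    # assumed type string
                "attribute_value": value,
                "create": True,                 # assumed flag
            },
        })
    return batch


if __name__ == "__main__":
    commands = build_section_cap_commands("myOptions", True, (0.8, 0.1, 0.1))
    # This is the body you would POST to your RealityServer instance.
    print(json.dumps(commands, indent=2))
```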

Section Capping Enabled

Section Capping Disabled

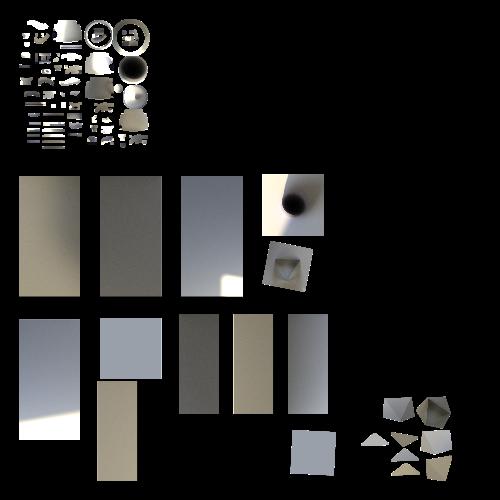

If you are not already familiar with UDIM then the image to the right might not mean much to you. However if you have been waiting for UDIM support you’ll know exactly what you are looking at. As part of the added support for MDL 1.4, UDIM support has now also been included. Basically it lets you use a set of texture files, potentially of varying resolution on an object with a single UV space. Tools such as Substance Designer are now supporting this technology as well.

So why would we want this? Basically it allows you to have different texture resolutions on different parts of the object. This is extremely useful when you might need more texture detail in one part of an object or the UV coordinates cover a greater area in one part. The image here doesn’t really exploit this but there is a wealth of content out there that makes heavy use of UDIM. A complete description of UDIM is beyond the scope of this post however some quick Google-Fu will find you some useful resources.

Refer to the MDL 1.4 Specification section 2.3 on Texture Files for more details on how to specify UDIM textures. In terms of RealityServer, basically it means using a special filename when loading up textures. If using the standard UDIM convention, that would be texture_<UDIM>.png or something similar. The <UDIM> part will then be replaced at runtime with the relevant index based on where in UV space you currently are. The entire UDIM set of textures is treated as a single image even though it references multiple files, making it much simpler to handle.
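To make the index substitution concrete, here is a small sketch of the standard UDIM numbering, where tiles start at 1001, run ten columns across in U and then step up in V. It illustrates the convention only. How Iray resolves tiles internally is not covered here, and the function names are hypothetical.

```python
import math


def udim_tile(u, v):
    """Return the standard UDIM tile number covering the UV point (u, v)."""
    if u < 0 or v < 0:
        raise ValueError("UDIM tiles are only defined for non-negative UVs")
    column = math.floor(u)
    row = math.floor(v)
    if column > 9:
        raise ValueError("UDIM tiles span at most ten columns in U")
    return 1001 + column + 10 * row


def resolve_udim(pattern, u, v):
    """Substitute the <UDIM> marker in a filename pattern for a UV point."""
    return pattern.replace("<UDIM>", str(udim_tile(u, v)))


if __name__ == "__main__":
    for uv in [(0.5, 0.5), (1.2, 0.3), (0.5, 1.5), (2.5, 1.1)]:
        print(uv, resolve_udim("texture_<UDIM>.png", *uv))
```

So a point in the first unit square maps to texture_1001.png, its neighbour to the right in U to texture_1002.png, and the tile directly above it in V to texture_1011.png.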

Of course, as usual we have incorporated a lot of bug fixes and other more minor improvements. Get a complete list of the changes in the relnotes.txt file included in the root directory of your RealityServer installation. Here are a few additional highlights from the smaller improvements.

You might have noticed the teapot in our lightmap renderer section above. This was made with the new generate_teapot command. It’s always useful to have some way to easily make non-trivial geometry for testing and now you can do it in a single command. It uses the original Bézier patch data for the teapot and tessellates this inside a server-side V8 command, so you can also check out the source code as a nice new example command.

The command takes a parameter allowing you to specify the level of subdivision so you can make more or less densely tessellated versions depending on your requirements. The command also uses the new mesh welding command to join the individual teapot parts into a single mesh object. Correct UV coordinates and normals are also computed, making the teapot a really useful test object.
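To show how a subdivision level turns Bézier patch data into triangles, here is a generic sketch that evaluates one bicubic patch on a regular grid. The real command is written in server-side JavaScript and its source ships with RealityServer. The function names, the grid layout and the flat test patch below are assumptions for illustration only.

```python
def bernstein3(t):
    """Cubic Bernstein basis values at parameter t."""
    s = 1.0 - t
    return (s * s * s, 3 * s * s * t, 3 * s * t * t, t * t * t)


def eval_patch(control, u, v):
    """Evaluate a bicubic Bezier patch given a 4x4 grid of (x, y, z) points."""
    bu, bv = bernstein3(u), bernstein3(v)
    point = [0.0, 0.0, 0.0]
    for i in range(4):
        for j in range(4):
            weight = bu[i] * bv[j]
            for axis in range(3):
                point[axis] += weight * control[i][j][axis]
    return tuple(point)


def tessellate_patch(control, subdivisions):
    """Sample the patch on a regular grid and connect it with triangles."""
    n = subdivisions
    vertices = [eval_patch(control, i / n, j / n)
                for i in range(n + 1) for j in range(n + 1)]
    triangles = []
    for i in range(n):
        for j in range(n):
            a = i * (n + 1) + j   # corner of the current grid cell
            b = a + 1
            c = a + (n + 1)
            d = c + 1
            triangles += [(a, c, b), (b, c, d)]
    return vertices, triangles


if __name__ == "__main__":
    # A flat unit-square patch stands in for real teapot patch data.
    flat = [[(i / 3, j / 3, 0.0) for j in range(4)] for i in range(4)]
    verts, tris = tessellate_patch(flat, 4)
    print(len(verts), len(tris))
```

Each extra level of subdivision adds a row and a column of samples per patch, which is why the triangle count grows quickly as the level goes up.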

We added a get_gpu_statistics command which gives you back the free, total and used memory as well as utilisation level of all of the GPUs in the system. We’ve had quite a few requests for this information and previously customers had to make their own little servers to get that information out, now it’s all built in. When you run the command you’ll get something like this back.

    {
        "id": 1,
        "jsonrpc": "2.0",
        "result": [
            {
                "free_memory": 3337371648,
                "total_memory": 6442450944,
                "used_memory": 3105079296,
                "utilization": 0
            }
        ]
    }

An array is returned since you may have multiple GPUs. The array order and indices are based on those from Iray inside RealityServer. This information could also be helpful in driving load balancers and other deployment management tools. You can also use it to quickly determine if the GPUs are being utilised for rendering without having to login to the server and use the traditional nvidia-smi command.
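As a sketch of how a monitoring script or load balancer might consume this reply, the snippet below builds the JSON-RPC request and summarises a response of the shape shown above. The empty params object and the helper name are assumptions, and actually sending the request to your server is left out.

```python
import json

# Request body for the command; the empty params object is an assumption.
REQUEST = {"jsonrpc": "2.0", "id": 1,
           "method": "get_gpu_statistics", "params": {}}

# The reply shown in this post, used here in place of a live server.
SAMPLE_RESPONSE = """
{"id": 1, "jsonrpc": "2.0", "result": [
  {"free_memory": 3337371648, "total_memory": 6442450944,
   "used_memory": 3105079296, "utilization": 0}
]}
"""


def summarise_gpus(response_text):
    """Return one summary dict per GPU from a get_gpu_statistics reply."""
    reply = json.loads(response_text)
    if reply.get("error"):
        raise RuntimeError("command failed: %s" % reply["error"])
    summary = []
    for index, gpu in enumerate(reply["result"]):
        summary.append({
            "gpu": index,  # position in the returned array
            "memory_used_fraction": gpu["used_memory"] / gpu["total_memory"],
            "utilization": gpu["utilization"],
        })
    return summary


if __name__ == "__main__":
    print(json.dumps(REQUEST))
    for entry in summarise_gpus(SAMPLE_RESPONSE):
        print(entry)
```

For the sample reply this reports roughly 48% of the memory in use on the single GPU, which is the kind of figure a load balancer could use to decide where to send the next session.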

The element_set_attribute command can now set structure attributes. This is necessary if you want to set the approximation settings for tessellation used in displacement, subdivision surfaces or freeform surfaces.

Just like on the import commands the export_scene and export_scene_elements commands now support an export_options parameter so you can pass exporter options to the exporter. This is most relevant for the .mi export where you can use the mi_write_binary_vectors_limit option to output vector data as binary in .mi files, saving a lot of disk space.

If you don’t have your release notification yet, reach out and we will make sure you get the details for downloading the new version. As usual, the release notes and Iray release notes contain a lot of helpful information in terms of finding out everything that has changed and checking on whether a bug you were interested in has been fixed. As always, get in touch if you want to learn more.

Paul Arden has worked in the Computer Graphics industry for over 20 years, co-founding the architectural visualisation practice Luminova out of university before moving to mental images and NVIDIA to manage the Cloud-based rendering solution, RealityServer, now managed by migenius where Paul serves as CEO.