As any retailer or product marketer knows, it is almost impossible to sell a product without a photograph. From these images the prospective purchaser gains reassurance and essential detail about usability, suitability, attractiveness, size, functionality and more that cannot be communicated by words alone. These images have been proven to do the job significantly better if they are of high resolution and show the product in context, enabling buyers to see exactly how their specific product will look in their own environment.

This image is computer-generated – but could you tell the difference?

For years, professional photographers have been employed to produce these images in studios in which the sets, lighting and finishing systems are constantly reconfigured to show each product at its best. This has always been expensive, but until recently there has been no alternative. Consider the cost of professional photographers, studio time, constant reconfiguration, and the logistics necessary to bring pristine product stock, in all its available options and variants, to and from the manufacturer’s warehouse without damaging it. In addition to those direct costs, planning, photographing and processing the images, then passing them through final selection and delivery, takes weeks, if not months, of programme time.

Image that would require an army of set designers to photograph in the real world (Courtesy of Floorplanner.com)

The problem is that, in today’s fast-moving markets, physical photography is simply not financially or logistically feasible in many cases. However, computer-generated images of the highest quality and realism can now be produced and distributed faster than physical photographs, enabling orders to be taken earlier and, in many cases, even before the products themselves have been manufactured. This compresses the ‘order to cash’ process, while enabling game-changing agility in meeting consumer demand for constantly evolving fashions and designs.

Computer visualisation has many advantages over physical photography as a source of imagery. The necessary 3D model detail can easily be derived from prototyping and production tooling files; garment models can quickly be created from garment simulation systems (such as Optitex or Marvelous Designer) using only 2D pattern files. High-quality, detailed images can be produced well before the production phase to assist marketing teams with advance promotion, at costs and timings comparable with, or better than, physical photography.

Use of garment simulation systems enables photorealistic draping of fabric.

However, the big problem with standard computer visualisation is that generating imagery of sufficient detail and quality to convince the prospective purchaser that your product is the best choice requires a team of 3D specialists who must endlessly tweak, test and edit a huge variety of system parameters, adding substantial time and cost. This army of specialists needs to cover many complex areas, including rendering algorithms; material, colour and light source definitions; shadow, level of detail (LOD), camera and global illumination parameters; render system management; and image compositing, to list just a few.

The real frustration here is that this same process needs to be largely repeated for almost every image. At the end of it all, either the imagery will still look computer-generated or, even if you do end up with impressive imagery, you still cannot be sure that it accurately represents what the item will look like when it has been purchased and delivered. This issue of getting what you are expecting is particularly important for products chosen on the basis of personal taste, such as soft furnishings, garments, footwear, fabrics, paint finishes, coverings and bedding.

Now there is another option available to you – Interactive, Physically Based Rendering. Physically based rendering was born, in part, out of the requirements of the US Department of Energy, which provided funding in the early eighties to the Lawrence Berkeley National Laboratory (LBNL) for research into new ways to use computers to simulate the transport of energy within complex spaces. It was here that Greg Ward implemented the Radiance Synthetic Imaging System. Evidence of this seminal work is still seen in almost every modern, physically based rendering tool. Radiance continues to be actively developed today, being used primarily by researchers and industry professionals for lighting applications.

With significant contributions from various commercial groups over this same period, we now have algorithms that not only accurately calculate the precise distribution of light and colour within complex environments, combining natural and artificial light sources with complex surface and material definitions, but can also complete these calculations in real time or near real time.

In short, we now have a technology platform that will take standard 3D files, apply comprehensive material definitions, insert a fixed or custom lighting system and a 3D scene file for context, and produce a physically accurate, high-definition, photographic image file, without the need for any 3D specialist input and all occurring within seconds.

Interactive, physically based rendering provides companies with the photographic accuracy of a professional studio and early availability of imagery for their entire product range, and all for a fraction of the cost needed by previous image production systems.

The important difference from non-physically based computer visualisation is that physically based 3D scenes parallel the real world. Once the materials and items have been selected and placed in the scene, and the lighting system set, then just as in a real photo studio you cannot tweak or edit any of the material parameters or alter the way the light travels through your scene, as such changes would compromise the physically based rendering algorithm. If you need to alter either the materials or the lighting configuration then, just as in a photographic studio in the real world, you actually need to change the material or reposition or modify the luminaire.

As in a real-world studio, physically based rendering provides instantaneous control of all your camera settings, including focal length, aperture, depth of field, ISO setting and exposure, although default and automatic settings are also provided.
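Because these camera controls behave as they do on a physical camera, the usual photographic arithmetic applies to them. The short Python sketch below uses the standard exposure value relation, EV = log2(N² / t) − log2(ISO / 100), to show how aperture, shutter time and ISO trade off against one another. The function name and parameters are illustrative assumptions only and are not part of any rendering platform’s API.

```python
import math


def exposure_value(f_number: float, shutter_s: float, iso: float = 100.0) -> float:
    """Exposure value referenced to ISO 100 (hypothetical helper).

    f_number  -- aperture as an f-number, e.g. 8 for f/8
    shutter_s -- shutter time in seconds, e.g. 1/60
    iso       -- sensor sensitivity (ISO setting)
    """
    if f_number <= 0 or shutter_s <= 0 or iso <= 0:
        raise ValueError("camera settings must be positive")
    return math.log2(f_number ** 2 / shutter_s) - math.log2(iso / 100.0)


# f/8 at 1/15 s and f/4 at 1/60 s admit the same amount of light,
# so both settings give the same exposure value.
same_a = exposure_value(8, 1 / 15)
same_b = exposure_value(4, 1 / 60)

# Doubling the ISO lowers the ISO-100-referenced value by exactly one stop.
one_stop = exposure_value(8, 1 / 60, iso=100) - exposure_value(8, 1 / 60, iso=200)
```

In practice this means a wider aperture chosen for a shallower depth of field can be balanced by a shorter shutter time or lower ISO, exactly as a photographer would do in a studio.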

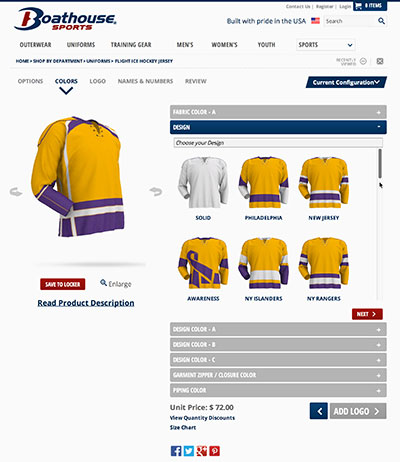

On-line retailers selling ‘mass customisation’ can benefit in particular from the ability of interactive, physically based rendering to automate the production of very large amounts of accurate, high-detail, photographic-quality imagery. This can be done either off-line as a batch render, or on-line, where prospective purchasers can custom configure a very wide variety of product options and view their precise selection both live and in stunning detail, prior to order placement. With the live system, not only can purchasers change product details, but they can also interactively view their chosen product in different contexts and lighting conditions (e.g. night or daytime). Only this physically based rendering platform can provide this capability, and do so without the need for any specialist input, so that the cost of image production is highly competitive and product updates can be accommodated at a fraction of the cost and time of other methods.

Mass customisation can require astronomical numbers of images, made possible by interactive physically based rendering
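To give a feel for how quickly these numbers grow, the following Python sketch counts and enumerates the images needed for a single configurable product. The option catalogue, contexts and lighting set-ups are entirely hypothetical, and the job descriptions are plain dictionaries rather than any real render platform’s format.

```python
from itertools import product
from math import prod

# Hypothetical option catalogue for one configurable shoe (illustrative only).
OPTIONS = {
    "upper_colour": ["black", "navy", "red", "white", "grey"],
    "sole_colour": ["white", "gum", "black", "red"],
    "lace_colour": ["white", "black", "red"],
    "material": ["canvas", "leather"],
}
CONTEXTS = ["studio", "street", "living_room"]  # assumed scene files
LIGHTING = ["day", "night"]                     # assumed lighting set-ups


def count_images(options, contexts, lighting):
    """Number of distinct images: the product of every option count,
    multiplied by the number of contexts and lighting set-ups."""
    variants = prod(len(choices) for choices in options.values())
    return variants * len(contexts) * len(lighting)


def iter_jobs(options, contexts, lighting):
    """Lazily yield one render-job description (a plain dict) per image."""
    keys = list(options)
    for combo in product(*(options[k] for k in keys)):
        for context, light in product(contexts, lighting):
            yield {**dict(zip(keys, combo)), "context": context, "lighting": light}


print(count_images(OPTIONS, CONTEXTS, LIGHTING))  # 5 * 4 * 3 * 2 * 3 * 2 = 720
```

Even this small catalogue needs 720 images for one product. Adding one more option with ten choices would raise that to 7,200, which is why a lazy generator such as `iter_jobs` suits a batch render better than building the full list in memory.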

migenius’ mission is to enable everyone to use photorealistic 3D rendering to communicate and evaluate their ideas and products. Our RealityServer platform for interactive, physically based rendering is a proven technology, already used by some of the world’s most recognised brands, such as The North Face, Reebok, Wild Things, Takemoto, Timberland, Vans, Boathouse Sports, Thermo Scientific, Floorplanner, and Bloom Unit. Importantly, RealityServer customers have the choice of licensing the platform on either a fixed-fee basis or a utility model where they only pay while they are rendering images. When combined with the option to run RealityServer on “cloud” infrastructure (the subject of a future article), the cost of offering interactive, physically based rendering becomes truly scalable, without the need for the usual huge, up-front investments in technology.

To find out more about how we can help your marketing and communication stay in front of the competition, contact us by phone or email and we’d be happy to discuss your requirements.

Chris has over 20 years of direct experience and deep knowledge of 3D project management systems and high-speed, physically based rendering technologies. As former CEO and co-founder of Luminova, a specialist 3D visualisation and application development group, he saw some 3,000 projects successfully delivered to many of the world’s largest companies over a 13-year period. Since 2011 Chris has been a partner and co-founder of migenius, the home of the cloud-based RealityServer solution platform.