In this article I am going to show you how to add light sources to your RealityServer scene using the V8 server-side JavaScript API. You will learn how to add several different types of lights, including a photometric light using an IES data file, an area light, a spot light and daylight. This is a very simple example, but it gives you all of the pieces you need to add lighting to your scene programmatically. You can easily expand on the concepts shown here to make other types of lighting.

This article is an updated version of this earlier post which used the JSON-RPC API directly to create lighting. Of course that approach still works but these days using the V8 API is a much easier way to manipulate your scene. So here you will learn how to access the same functionality from within V8 using its helper classes to make it much simpler.

You’ll learn how to create the scene above purely within a V8 command, including the geometry, lighting, a section plane to cut away the wall and finally loading some pre-existing objects from disk and placing them in the scene with some different materials. As can be seen above, you will also have the option to turn daylighting on or off.

If you haven’t already done so, I highly recommend reading the article on Creating an Empty Scene with Server-side V8. This article will be assuming you are familiar with the information there. To follow along you will need an environment from which to edit your command as well as a running instance of RealityServer itself. I recommend Postman for sending commands, however any tool that can send HTTP POST requests will do. It can be useful to enable developer mode in the V8 configuration in your realityserver.conf file so that your command will re-load whenever you change your script.

This article also assumes you are running RealityServer 6.0 or later and uses some features introduced in that version. Even so the information can be easily adapted to earlier versions if needed.

The command we will create for this article uses quite a few different helper classes. After creating your command file (for example tutorial_lights.js) and saving it in your V8 commands directory (by default v8/commands), let’s include the following helper classes.

const Scene = require('Scene');

const Camera = require('Camera');

const Group = require('Group');

const Instance = require('Instance');

const Options = require('Options');

const Light = require('Light');

const Lightprofile = require('Lightprofile');

const Polygon_mesh = require('Polygon_mesh');

const Section_object = require('Section_object');

const Mdl_function_call = require('Mdl_function_call');

const Mdl_material_instance = require('Mdl_material_instance');

This gives you a feeling for some of the topics we’ll be exploring here. There are a few that are not strictly required to get lighting working (such as the section plane) but since they were used in the original article I’ll show you how to use them.

The command definition will be very simple with only two arguments, one to give the name of the scene we want to create and another to tell it whether we want the daylighting system turned on. We need the name of the scene so we can pass it to future commands such as might be needed to render or further manipulate the scene.

module.exports.command = {

name: 'tutorial_lights',

description: 'Creates a scene with multiple artificial light sources.',

groups: ['tutorial', 'javascript'],

arguments: {

scene_name: {

description: 'The name of the scene that will be created.',

type: 'String'

},

daylight: {

description: 'If true then daylighting will be turned on.',

type: 'Boolean'

}

},

execute: function({scene_name, daylight}) {

...

}

};

All of the remaining code I will show is inside the body of the execute function. I’ll progressively add to it building up the scene as we go.

Before populating it we need to create all of the basic elements for the scene. Since this was covered in the Creating an Empty Scene article we’ll gloss over the details here. Here is the basic setup in code.

// Create all of the needed database elements

const scene = new Scene(scene_name, true);

const options = new Options(`${scene_name}_opt`, true);

const camera = new Camera(`${scene_name}_cam`, true);

const camera_instance = new Instance(`${scene_name}_cam_inst`, true);

const rootgroup = new Group(`${scene_name}_root`, true);

// Attach the camera to its instance and the instance to the rootgroup

camera_instance.item = camera;

rootgroup.attach(camera_instance);

// Set the needed elements onto the scene

scene.options = options;

scene.camera_instance = camera_instance;

scene.root_group = rootgroup;

// Import MDL modules that will be required later

Scene.import_elements('${shader}/base.mdl');

Scene.import_elements('${shader}/nvidia/core_definitions.mdl');

Something extra we’ve added here are the last two lines where we import some MDL modules which we will need later when we create our light sources and materials for the scene. The above creates an empty scene with the needed scene, options, camera, camera instance and rootgroup. This can already be rendered but will only give a black image so nothing to see yet.

We made a camera in the last section but by default it just sits at the origin pointing down the negative Z axis. Not terribly useful so we’ll get it in the right place.

// Set the camera instance transform for the view

camera_instance.matrix = new RS.Math.Matrix4x4([

1.0, 0.0, 0.0, 0.0,

0.0, 0.0, -1.0, 0.0,

0.0, 1.0, 0.0, 0.0,

-5.0, -1.5, -9.55, 1.0

]);

// Select the photographic tonemapping operator

camera.attributes.set('tm_tonemapper', 'mia_exposure_photographic', 'String');

camera.attributes.set('tm_enable_tonemapper', true, 'Boolean');

// Provide all settings for the operator

camera.attributes.set('mip_cm2_factor', 1.0, 'Float32');

camera.attributes.set('mip_whitepoint', new RS.Math.Color(1,1,1), 'Color');

camera.attributes.set('mip_film_iso', 100.0, 'Float32');

camera.attributes.set('mip_camera_shutter', 10.0, 'Float32');

camera.attributes.set('mip_f_number', 2.0, 'Float32');

camera.attributes.set('mip_vignetting', 0.0, 'Float32');

camera.attributes.set('mip_crush_blacks', 0.2, 'Float32');

camera.attributes.set('mip_burn_highlights', 0.25, 'Float32');

camera.attributes.set('mip_burn_highlights_per_component', true, 'Boolean');

camera.attributes.set('mip_burn_highlights_max_component', false, 'Boolean');

camera.attributes.set('mip_saturation', 1.0, 'Float32');

camera.attributes.set('mip_gamma', 2.2, 'Float32');

// Set some basic camera parameters for a perspective camera

camera.resolution_x = 1280;

camera.resolution_y = 400;

camera.aspect = camera.resolution_x / camera.resolution_y;

We create a transformation matrix to rotate the camera and reposition it so that it looks into where our scene will be. This can also be created by multiplying individual matrices, as we will see later, but here we just create the final matrix directly. We then set a number of attributes on the camera to configure the tone-mapping parameters. These control the brightness and contrast, using parameters like those you would find on a regular camera (e.g., f-number, shutter). Finally we set a resolution and then the image aspect ratio. The tone-mapping above is set up for the scene when daylighting is turned off.
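To make the layout of the camera matrix a little more concrete, here is a small plain JavaScript sketch that runs outside RealityServer. It assumes the sixteen numbers are read row by row, that points are treated as row vectors with the translation in the last row, and that the instance matrix maps world space into the camera's local space. The transformPoint helper is hypothetical and only for illustration.

```javascript
// Hypothetical helper: multiply the row vector [x, y, z, 1] by a 4x4 matrix
// stored as 16 numbers in row order (translation assumed in the last row).
function transformPoint(m, [x, y, z]) {
  return [
    x * m[0] + y * m[4] + z * m[8] + m[12],
    x * m[1] + y * m[5] + z * m[9] + m[13],
    x * m[2] + y * m[6] + z * m[10] + m[14]
  ];
}

// The same values used for camera_instance.matrix in the article.
const cameraMatrix = [
  1.0, 0.0, 0.0, 0.0,
  0.0, 0.0, -1.0, 0.0,
  0.0, 1.0, 0.0, 0.0,
  -5.0, -1.5, -9.55, 1.0
];

// World point that should land on the camera's local origin.
const cameraLocal = transformPoint(cameraMatrix, [5.0, -9.55, 1.5]);

// World point one unit further along +Y, i.e. towards the room.
const ahead = transformPoint(cameraMatrix, [5.0, -8.55, 1.5]);

console.log('camera position in local space:', cameraLocal);
console.log('point ahead in local space:', ahead);
```

Under those assumptions the camera sits at (5, -9.55, 1.5) in world space, and a point one unit further along the world Y axis lands on the camera's negative Z axis, which is the direction it looks along.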

Daylighting is controlled through the environment function. You can see more details on this in the article Environment Lighting with Server-side V8. There is a special built in environment function for daylighting called sun_and_sky which we will use here.

// Create the sun and sky environment with physically based parameters

if (daylight) {

let environment_function = Mdl_function_call.create(`${scene.name}_environment_function`,

'mdl::base::sun_and_sky', {

on: true,

multiplier: 0.10132,

rgb_unit_conversion: { r: 1.0, g: 1.0, b: 1.0 },

haze: 0.5,

redblueshift: 0.0,

saturation: 1.0,

horizon_height: 0.001,

horizon_blur: 0.1,

ground_color: { r: 0.4, g: 0.4, b: 0.4 },

night_color: { r: 0.0, g: 0.0, b: 0.0 },

sun_direction: { x: -0.15, y: -0.65, z: 0.9 },

sun_disk_intensity: 1.0,

sun_disk_scale: 1.0,

sun_glow_intensity: 1.0,

y_is_up: false,

flags: 0,

physically_scaled_sun: true

}

);

// Set the environment_function attribute on the options to actually use the environment

options.attributes.set('environment_function', environment_function.name, 'Ref');

// Set environment intensity to 1.0 as we want to control with the sun sky system

options.attributes.set('environment_function_intensity', 1.0, 'Float32');

// Adjust the previously setup tone-mapper

camera.attributes.set('mip_camera_shutter', 750.0, 'Float32');

}

We create the sun_and_sky function with the parameters we want and then set an attribute on our scene’s options to use it. There is also a multiplier we can set to change the overall intensity of the environment. With the exception of the sun_direction parameter, the above parameters give a physically correct daylight system. In this case we’ve chosen the sun direction by hand to work for this scene. You can also drive this from date and time by calling out to the RS.set_sun_position command which computes this vector based on geographic location, date and time.
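As a quick sanity check on a hand-picked sun_direction, the following plain JavaScript sketch works out how high the sun is above the horizon. It assumes Z is the up axis (y_is_up is false above) and that the vector points from the scene towards the sun. The sunElevationDegrees helper is hypothetical and is not a RealityServer function.

```javascript
// Hypothetical helper: elevation of a direction vector above the horizon,
// assuming Z is up and the vector points towards the sun.
function sunElevationDegrees({ x, y, z }) {
  const length = Math.hypot(x, y, z);
  return Math.asin(z / length) * (180 / Math.PI);
}

// The sun_direction used in the article (it does not need to be normalised here).
const elevation = sunElevationDegrees({ x: -0.15, y: -0.65, z: 0.9 });

console.log(`sun elevation: ${elevation.toFixed(1)} degrees`);
```

For the direction used here that comes out at a little over 53 degrees, so a fairly high sun.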

Finally we adjust the camera shutter if daylighting is turned on since the scene will be much brighter in that case. You may notice in the image at the start of this article that the artificial lighting is still just barely visible. This is because the daylight is an order of magnitude brighter, so the tone-mapping has to be adjusted. There is, however, also a command you can call out to, RS.camera_auto_exposure, to determine the correct settings for you. This works in a similar way to a regular camera’s automatic exposure.
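To get a feel for how large the shutter change is, here is a small worked calculation in plain JavaScript. It assumes mip_camera_shutter is expressed as the reciprocal of the exposure time in seconds (so 10 means 1/10 s), which is how photographic shutter values are usually written. The stopsBetween helper is hypothetical.

```javascript
// Hypothetical helper: difference in photographic stops between two shutter
// values given as reciprocal seconds. Each stop halves the exposure time.
function stopsBetween(shutterA, shutterB) {
  return Math.log2(shutterB / shutterA);
}

// Shutter values used in the article without and with daylight.
const stops = stopsBetween(10.0, 750.0);

console.log(`exposure reduced by about ${stops.toFixed(1)} stops`);
```

Going from 10 to 750 is a 75 times shorter exposure, or a little over six stops, with the f-number and ISO left unchanged.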

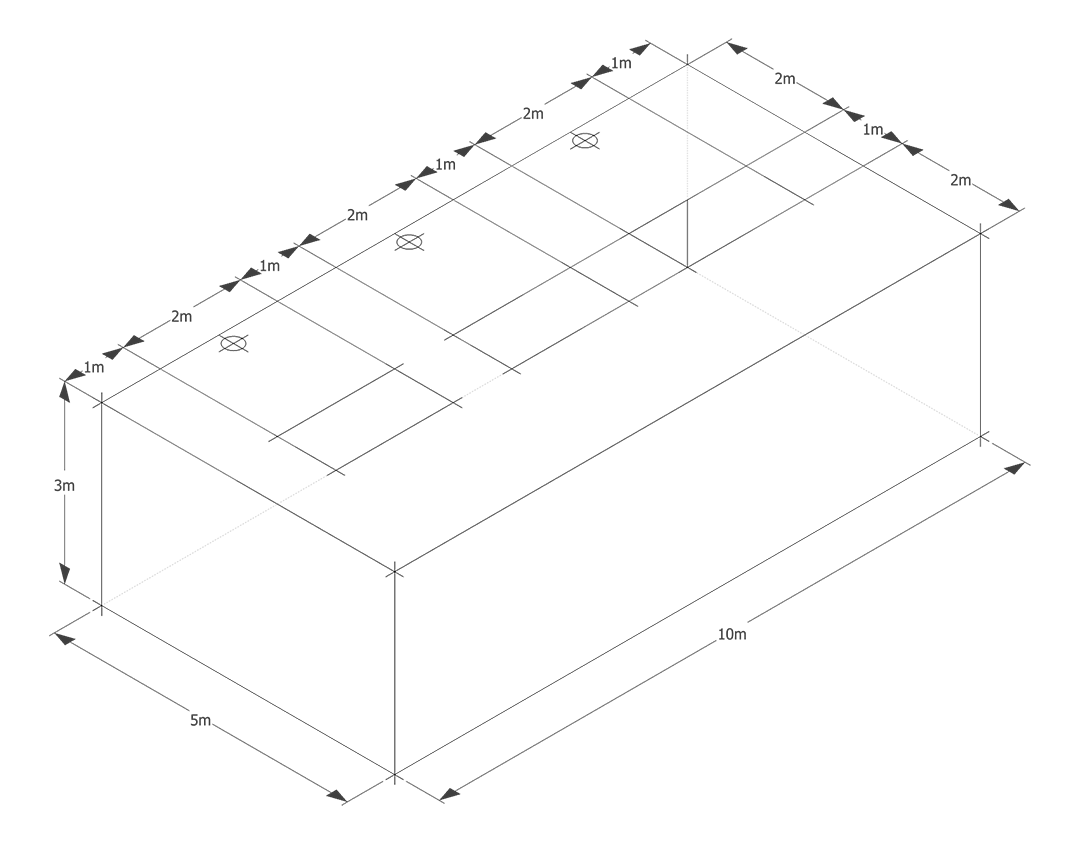

The lighting is coming soon, I promise, but first we need something to actually light up so we will create a room with some holes in the ceiling to let the daylighting in if we turn it on. We will use some new functionality for this which allows you to create a binary mesh representation for the room geometry and ask RealityServer to build it for you. Here is the room we want to make.

Based on this layout we will manually create the vertex data and polygons needed and then use the Polygon_mesh.create method to generate the geometry.

// Binary mesh representation of the room

let room_mesh = {

type: 'Polygon_mesh',

tagged: false,

points: new Binary(new Float32Array([

0, 0, 0, 0, 0, 3, 0, 5, 0, 0, 5, 3,

10, 0, 0, 10, 0, 3, 10, 5, 0, 10, 5, 3,

0, 2, 3, 1, 2, 3, 1, 3, 3, 0, 3, 3,

3, 2, 3, 4, 2, 3, 4, 3, 3, 3, 3, 3,

6, 2, 3, 7, 2, 3, 7, 3, 3, 6, 3, 3,

9, 2, 3, 10, 2, 3, 10, 3, 3, 9, 3, 3

]).buffer),

normals: new Binary(new Float32Array([

-1, 0, 0, 0, 1, 0,

1, 0, 0, 0, 0, -1,

0, 0, 1, 0, -1, 0

]).buffer),

uvs: [

new Binary(new Float32Array([

0, 0, 0, 3, 5, 0, 5, 3,

0, 0, 0, 3, 10, 0, 10, 3,

10, 0, 0, 0, 0, 5, 10, 5,

0, 2, 0, 3, 1, 2, 1, 3,

3, 2, 3, 3, 4, 2, 4, 3,

6, 2, 6, 3, 7, 2, 7, 3,

9, 2, 9, 3, 10, 2, 10, 3

]).buffer)

],

uv_sizes: [ 2 ],

corners: new Binary(new Uint32Array([

4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4

]).buffer),

offsets: new Binary(new Uint32Array([

0, 4, 8, 12, 16, 20, 24, 28, 32, 36, 40

]).buffer),

point_indices: new Binary(new Uint32Array([

0, 2, 3, 1, 2, 6, 7, 3, 6, 4, 5, 7, 4, 0, 2, 6,

0, 4, 5, 1, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19,

20, 21, 22, 23, 1, 5, 21, 8, 11, 22, 7, 3

]).buffer),

normal_indices: new Binary(new Uint32Array([

0, 0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 2, 3, 3, 3, 3,

5, 5, 5, 5, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4,

4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4

]).buffer),

uv_indices: [

new Binary(new Uint32Array([

0,1,2,3, 4,5,6,7, 0,1,2,3, 8,9,10,11,

5,4,7,6, 12,13,14,15, 16,17,18,19, 20,21,22,23,

24,25,26,27, 9,8,25,12, 15,26,11,10

]).buffer)

]

};

// Create the room geometry from the data and add to the scene

let room_object = Polygon_mesh.create(`${scene_name}_room_obj`, room_mesh);

let room_instance = new Instance(`${scene_name}_room_inst`, true);

room_instance.item = room_object;

rootgroup.attach(room_instance);

// Add a simple diffuse material for the room and assign

let room_material = Mdl_material_instance.create(`${scene_name}_room_mat`,

'mdl::nvidia::core_definitions::diffuse', {

diffuse_color: new RS.Math.Color(0.7, 0.7, 0.7)

}

);

room_instance.set_material(room_material);

After we have made the geometry we need to instance it, then add it to our scene’s rootgroup so that it will render. Finally we will also create a very simple diffuse white material and assign that to our room geometry. If you were to render the scene at this point you’d still get a black image (or if you have daylighting on, probably a white one) but that will change shortly.
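Hand-written index arrays like the ones in the room mesh are easy to get wrong, so it can help to check them before handing them to RealityServer. The following plain JavaScript sketch is one way to do that. It assumes corners holds the number of corners per polygon, offsets holds the starting position of each polygon in point_indices, and every index must refer to an existing point. The checkMeshIndices helper is hypothetical and not part of the RealityServer API.

```javascript
// Hypothetical helper: returns a list of problems found in the index data.
function checkMeshIndices({ pointCount, corners, offsets, pointIndices }) {
  const problems = [];
  if (corners.length !== offsets.length) {
    problems.push('corners and offsets must have one entry per polygon');
  }
  // Each offset should equal the running total of the corner counts before it.
  let expectedOffset = 0;
  corners.forEach((cornerCount, face) => {
    if (offsets[face] !== expectedOffset) {
      problems.push(`face ${face}: offset ${offsets[face]} should be ${expectedOffset}`);
    }
    expectedOffset += cornerCount;
  });
  if (expectedOffset !== pointIndices.length) {
    problems.push(`corners sum to ${expectedOffset} but there are ${pointIndices.length} indices`);
  }
  // Every index must refer to a point that exists.
  pointIndices.forEach((index, position) => {
    if (index >= pointCount) {
      problems.push(`index ${index} at position ${position} is out of range`);
    }
  });
  return problems;
}

// Index data copied from the room mesh; the points array holds 24 vertices.
const roomProblems = checkMeshIndices({
  pointCount: 24,
  corners: new Uint32Array([4, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4]),
  offsets: new Uint32Array([0, 4, 8, 12, 16, 20, 24, 28, 32, 36, 40]),
  pointIndices: new Uint32Array([
    0, 2, 3, 1, 2, 6, 7, 3, 6, 4, 5, 7, 4, 0, 2, 6,
    0, 4, 5, 1, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19,
    20, 21, 22, 23, 1, 5, 21, 8, 11, 22, 7, 3
  ])
});

console.log(roomProblems.length === 0 ? 'room mesh indices look consistent' : roomProblems);
```

The same idea extends to normal_indices and uv_indices by passing the number of normals or UVs as the count.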

Ok, just one more trailer before the main feature. Right now the camera is positioned outside our room so that we can fit it all in the frame. However, this means we are just looking at the outside wall and can’t see into the room. RealityServer allows you to use Section Objects to cut away the wall so you can see into the room without affecting how it is illuminated (you can also optionally let light in through the cutout if you wish). Section objects are just like regular scene objects and need to be instanced but their orientation and position will determine how they cut away the scene geometry. Here is the code we need.

// Add section plane to cut away wall

let section = new Section_object(`${scene_name}_section`, true);

section.clip_light = true;

let section_instance = new Instance(`${scene_name}_section_inst`, true);

section_instance.item = section;

section_instance.matrix = new RS.Math.Matrix4x4([

1, 0, 0, 0,

0, 0, -1, 0,

0, 1, 0, 0,

0, 0, 0.001, 1

]);

rootgroup.attach(section_instance);

The clip_light property shown above tells RealityServer we do not want the cut-away to let extra light into the scene. The transform matrix of the instance of the section plane rotates it and positions it so that it is just inside the front wall and cuts away everything between it and the camera. Now that the scene is ready we can finally light it up.

For the first light (I’ll show three types) let’s make a photometric light which uses an industry standard IESNA LM-63-02 data file (commonly just called an IES file) to represent the intensity of light output in different directions. For this exercise I have a file from a common low voltage downlight which produces the characteristic double scallop pattern of light if placed close to a wall. There are a few steps involved, first loading the needed MDL module.

// Load the needed MDL materials

Scene.import_elements('${shader}/material_examples/lights_photometric.mdl');

There are many MDL materials which can use photometric light profiles (in MDL the terminology is Light Profile). The above has most of the functionality you would need, however you can of course create your own custom MDL materials. MDL does not differentiate between materials and lights: a material for a light is just a material which provides emission. Since we will use a light profile here we need to create this first. Let’s do that along with creating the explicit light source.

// Create the Light and a Lightprofile using an IES file

let ies_light = new Light(`${scene_name}_ies_light`, true);

let ies_profile = new Lightprofile(`${scene_name}_ies_profile`, true);

ies_profile.filename = 'downlight.ies';

The first line here creates a Light element onto which we will set properties for its emitting material as well as values which determine its visibility and whether it is an area source or not. Note that rather than creating an explicit light source you can also just assign an emissive MDL material to any geometry you like, however explicit lights are generally faster to render.

Above we also create the Lightprofile object. This is handled in a similar way to a texture but instead of pixels the light profile (loaded from the .ies file) contains angles and intensities. After we create the Lightprofile we set its filename property to point to the .ies file we want to load. RealityServer will search your resource paths in the same way that it searches for textures to find the file. You can also load them directly from your content_root directory. Now we need a material.

// Create an emissive material using the light profile and set on the light

let ies_material = Mdl_material_instance.create(`${scene_name}_ies_mat`,

'mdl::material_examples::lights_photometric::ies_light', {

flux: 1200,

profile: ies_profile

}

);

ies_light.material = ies_material;

This is very similar to the way we made the material for the room, just with a different definition and parameters. We set a flux of 1200 lumens which represents the intensity of our light source and give our Lightprofile object to the profile argument for the material to use. Then the material property on the light is set to our new material. To actually use the light we need to create an instance of it and add it to our scene.

// Instance the light and transform it into place

let ies_instance = new Instance(`${scene_name}_ies_inst`, true);

ies_instance.item = ies_light;

ies_instance.matrix = new RS.Math.Matrix4x4([

1.0, 0.0, 0.0, 0.0,

0.0, 1.0, 0.0, 0.0,

0.0, 0.0, 1.0, 0.0,

-2.0, -4.75, -2.99, 1.0

]);

rootgroup.attach(ies_instance);

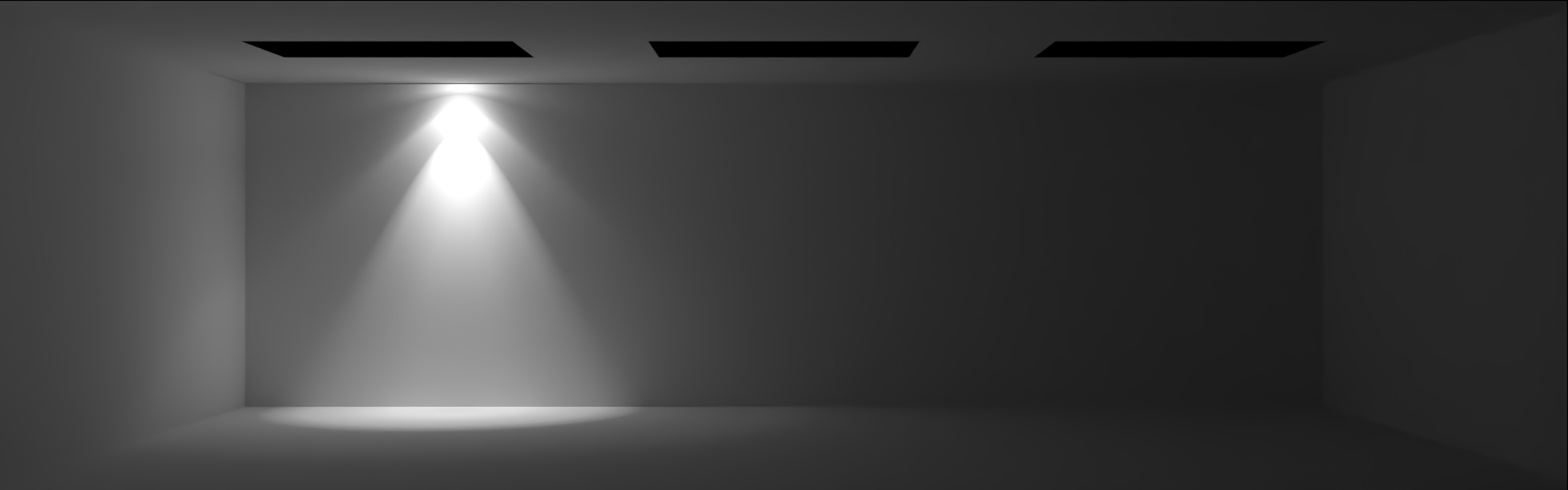

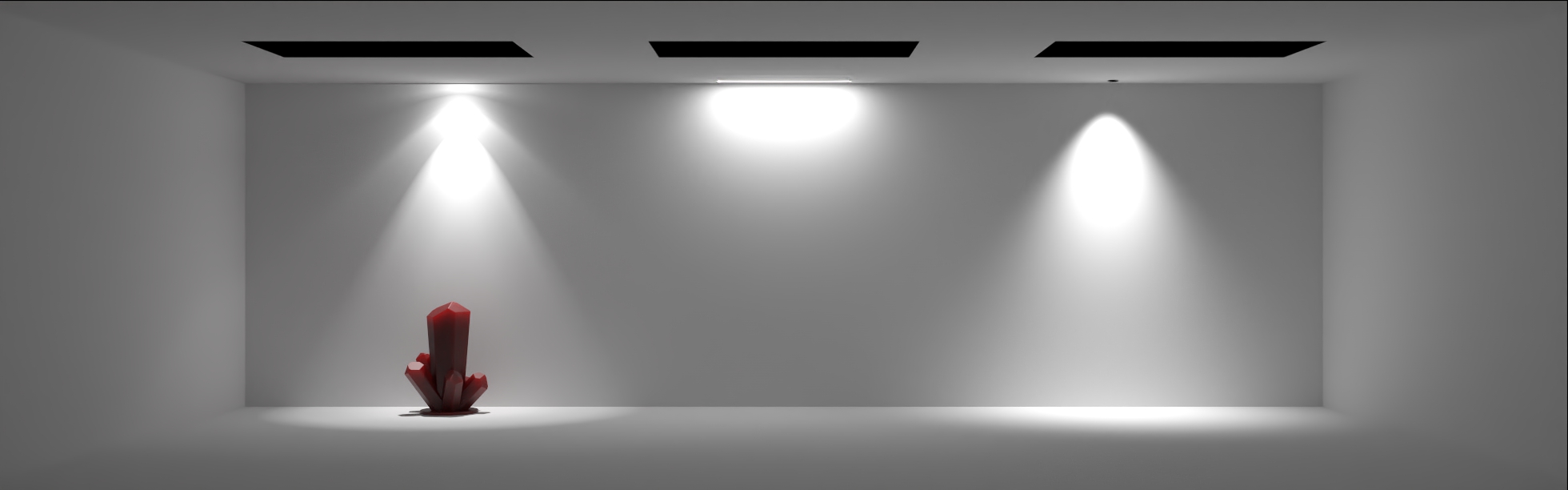

This is done the same way as any other object. Here a transformation matrix is set directly which positions the light on the ceiling to the back left of our scene. At this stage we can actually finally render something. If you call your new command and then render the resulting scene you should get something similar to this (if daylight is false).

In this case we created a point light which has no area, so any shadows from this light will be extremely sharp. In general it’s best to use area lights where appropriate to get more natural shadowing. The next two lights will use this feature.
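The last row of the light's instance matrix holds the negated world position, which is easy to get backwards when typing the values by hand. Here is a minimal plain JavaScript sketch of a helper that builds those sixteen numbers from the position you actually want. It assumes the instance matrix maps world space into the light's local space and that the values are listed row by row with the translation in the last row. The worldToObjectTranslation name is hypothetical.

```javascript
// Hypothetical helper: 16 numbers in row order for an instance that should
// appear at world position (x, y, z) with no rotation or scaling.
function worldToObjectTranslation(x, y, z) {
  return [
    1, 0, 0, 0,
    0, 1, 0, 0,
    0, 0, 1, 0,
    -x, -y, -z, 1
  ];
}

// The IES light sits just below the ceiling, towards the back left of the room.
const iesMatrixValues = worldToObjectTranslation(2.0, 4.75, 2.99);

console.log(iesMatrixValues.slice(12)); // [ -2, -4.75, -2.99, 1 ]
```

The resulting array has the same layout as the literal arrays passed to RS.Math.Matrix4x4 in the article, so it could be used in their place.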

For the second light I’ll show you how to create an area source. These light sources emit light from an area shape rather than a single point. The shapes available are sphere, rectangle, disc and cylinder. Area lights give softer, more realistic shadows and you can choose to have the emitting surface either visible or not (although they will always be visible in secondary bounces such as reflections and refractions). The code to make the area light is only slightly different.

// Create the Light and set its area parameters

let rect_light = new Light(`${scene_name}_rect_light`, true);

rect_light.area_shape = 'rectangle';

rect_light.area_size = new RS.Math.Vector2(1.2, 0.01);

// Make the light visible so we can see the emissive area

rect_light.attributes.set('visible', true, 'Boolean');

// Create an emissive material and set on the light

let rect_material = Mdl_material_instance.create(`${scene_name}_rect_mat`,

'mdl::material_examples::lights_photometric::diffuse_area_light', {

flux: 1200

}

);

rect_light.material = rect_material;

// Instance the light and transform it into place

let rect_instance = new Instance(`${scene_name}_rect_inst`, true);

rect_instance.item = rect_light;

rect_instance.matrix = new RS.Math.Matrix4x4([

1.0, 0.0, 0.0, 0.0,

0.0, 1.0, 0.0, 0.0,

0.0, 0.0, 1.0, 0.0,

-5.0, -4.75, -2.99, 1.0

]);

rootgroup.attach(rect_instance);

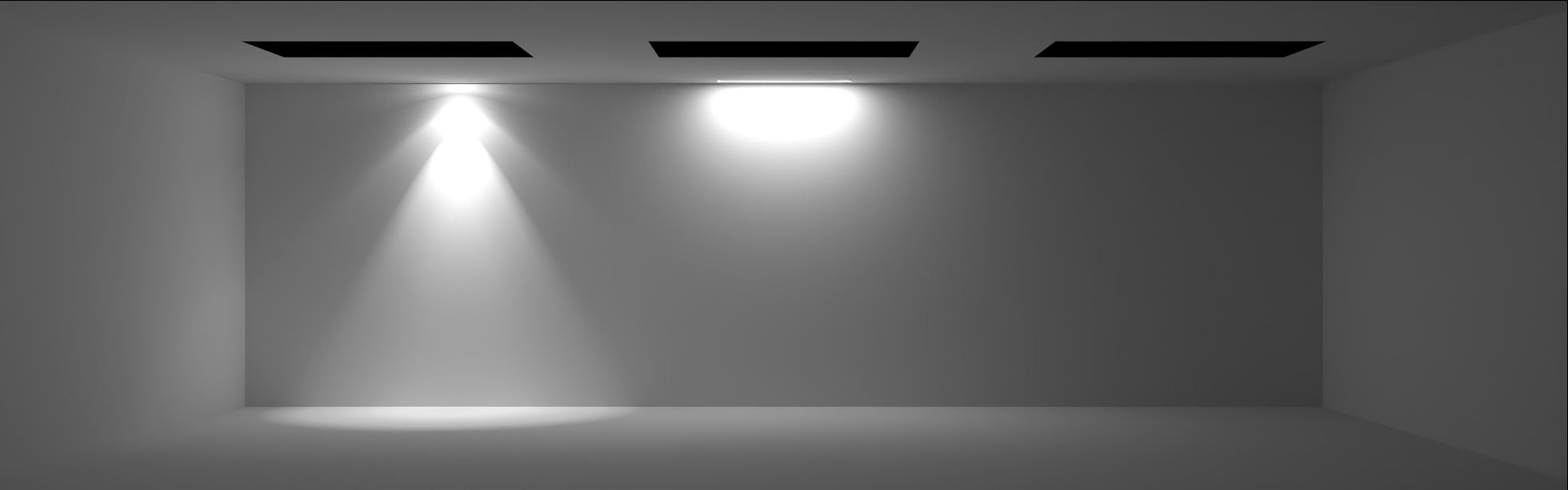

I won’t break it down like the previous light since there are not too many differences. Firstly note that we set the two properties which make this an area light source, area_shape and area_size. These are set on the light itself. For the rectangle area shape the area size is a two component vector specifying the length and width. I also set the visible attribute to true so that we can see the area source, since by default visible is not set. Instead of an IES style light, for this one I’ve used a diffuse area light which emits the same amount of light in all directions (modified only by the standard cosine factor). A matrix to put it in the middle position in our scene is given and then we get something like this when rendering.

You can see the emission takes place over the full area we defined and you can see the visible source as the white rectangle on the ceiling. A little later we will see the effect of the light on shadowing in the scene once we put some objects in.

For the last light I’ll use a disc shaped area source and use a spot light MDL definition which provides a basic cone shaped distribution. Again the code is quite similar.

// Create the Light and set its area parameters

let spot_light = new Light(`${scene_name}_spot_light`, true);

spot_light.area_shape = 'disc';

spot_light.area_radius = 0.05;

// Make the light visible so we can see the emissive area

spot_light.attributes.set('visible', true, 'Boolean');

// Create an emissive material and set on the light

let spot_material = Mdl_material_instance.create(`${scene_name}_spot_mat`,

'mdl::material_examples::lights_photometric::spot_light', {

flux: 1500,

spot_exponent: 2.5,

spot_spread_angle: 1.483530

}

);

spot_light.material = spot_material;

// Instance the light and transform it into place

let spot_instance = new Instance(`${scene_name}_spot_inst`, true);

spot_instance.item = spot_light;

spot_instance.matrix = new RS.Math.Matrix4x4([

1.0, 0.0, 0.0, 0.0,

0.0, 1.0, 0.0, 0.0,

0.0, 0.0, 1.0, 0.0,

-8.0, -4.75, -2.99, 1.0

]);

rootgroup.attach(spot_instance);

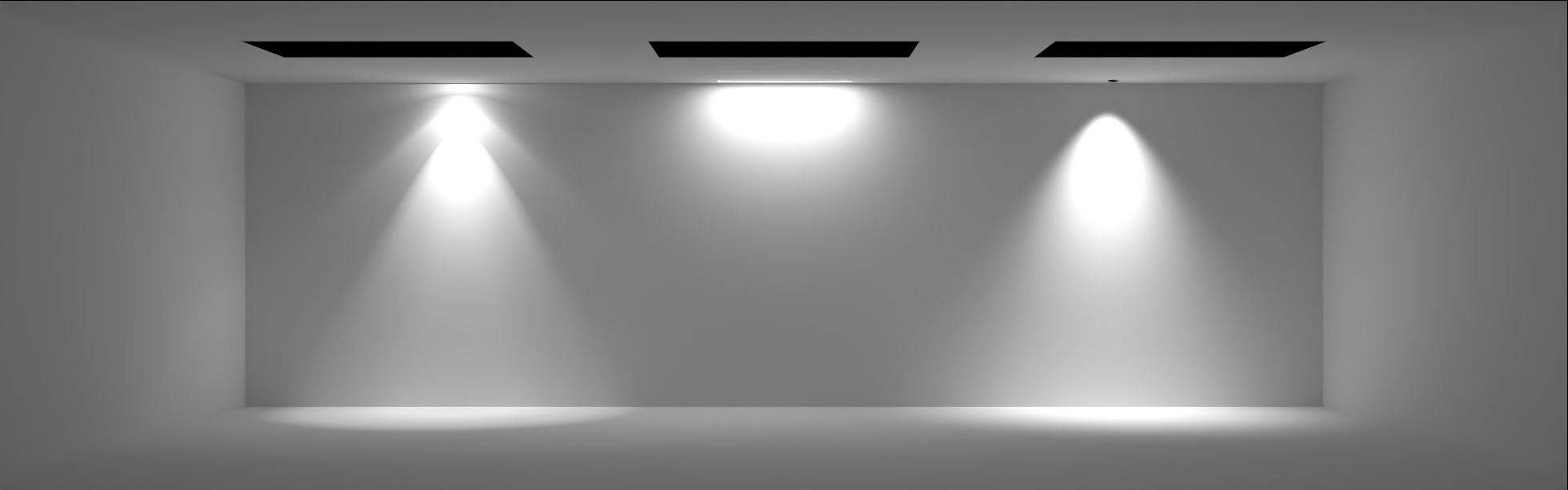

So when setting the area_shape property to disc, we have to set a different property to control its size, area_radius. This is also used for the sphere shape. This light has been made visible as well but you can also see the MDL definition now uses the spot_light material. This takes an exponent and spread angle (in radians) which controls the size of the cone into which light is emitted as well as how the intensity falls off as you move from a direction directly below the light to further out. The matrix positions this light on the right side and rendering gives us an image like this.
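Since the spread angle is given in radians, it is usually easier to think in degrees and convert. A tiny plain JavaScript sketch of that conversion follows; the helper names are my own and not part of any RealityServer API.

```javascript
// Hypothetical helpers for converting between degrees and radians.
function degreesToRadians(degrees) {
  return degrees * (Math.PI / 180.0);
}

function radiansToDegrees(radians) {
  return radians * (180.0 / Math.PI);
}

// The spot_spread_angle used in the article, expressed in degrees.
const spreadDegrees = radiansToDegrees(1.483530);

console.log(`spot_spread_angle is about ${spreadDegrees.toFixed(1)} degrees`);
console.log(`85 degrees is ${degreesToRadians(85).toFixed(6)} radians`);
```

The value 1.483530 used above corresponds to 85 degrees, so to try a narrower cone you could pass something like degreesToRadians(40) instead.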

The reader is encouraged to experiment with the settings for the lights and see how they affect the output. You might note in the image above the spot light area source appears black. This is an unfortunate consequence of the way in which Iray handles the direct visibility of area lights. It will show the camera the intensity of the light emitted from the source at that angle. In this case we are at a steep enough angle that the camera is outside the cutoff cone so we don’t see any emission. If you were to move the camera below the light and look up you would then see it. There is no solution for this which retains full physical accuracy. You can work around it by adding a much dimmer uniform emission to your light’s MDL material, although that will add slightly more light to the scene.

To make the scene a bit more interesting and add something to cast shadows let’s import an object and place some copies of it around the room. Obviously this is not strictly related to how to add lighting so you could stop at this point but read on if you are not already familiar with how to insert extra objects into your scene. Firstly we need to import the object. I’ll use the crystal.mi file which ships with RealityServer so we know it’s there.

// Import the scene elements for the crystal model and grab the rootgroup

let import_results = Scene.import_elements('scenes/crystal.mi', {

import_options: {

prefix: 'crystal::'

}

});

let crystal_group = new Group(import_results.rootgroup);

The static import_elements method on the Scene helper class loads the contents of the file but does not create a scene around it. The function returns the result of the import which will include the rootgroup property which has the name of the group which contains everything in the imported file. If you set the import option list_elements to true you’ll also get a list of everything that was imported.

In our case we just want to use everything in the file so we take the rootgroup and make a new instance of a Group to wrap the existing group. Since we do not pass true as the second parameter to the constructor this looks for an existing group in the database with that name and doesn’t create a new one. Next we want to instance and position our new object.

// Create an instance of the crystal and set its transformation

let crystal_1_instance = new Instance(`${scene_name}_crystal_1_inst`, true);

crystal_1_instance.item = crystal_group;

let crystal_1_rotation = new RS.Math.Matrix4x4();

crystal_1_rotation.set_rotation(new RS.Math.Vector4(1,0,0,1), -90.0 * (Math.PI / 180.0));

let crystal_1_translation = new RS.Math.Matrix4x4();

crystal_1_translation.set_translation(-2.0, 0.0, 4.5);

let crystal_1_scale = new RS.Math.Matrix4x4();

crystal_1_scale.set_scaling(0.2, 0.2, 0.2);

// Combine rotation, translation and scale matrices through multiplication

crystal_1_instance.matrix = crystal_1_rotation.multiply(

crystal_1_translation.multiply(crystal_1_scale));

I’ve numbered our instance here because we’ll make three of them. First we make a new instance and then set the group we made from our imported file as the item of that instance. Now I’ve done the transformation matrix a little differently for this one and if you want to combine translation, rotation and scaling this makes life much simpler.

Three matrices are created, one each for translation, rotation and scaling and we then multiply them together in the correct order to obtain the full transformation matrix combining all of these individual transformations. The set_translation, set_rotation and set_scaling methods of the RS.Math.Matrix4x4 class are used for this. Note that these methods take the inverse of the values you want to use, so for example our scale factor of 0.2, 0.2, 0.2 will make the object 5 times larger. For a much more detailed overview of how transformation matrices are constructed refer to our 3D Transformations article.
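Because the order of multiplication matters, it can be worth seeing the difference with plain numbers. The sketch below is ordinary JavaScript with a hand-written multiply4x4 helper (hypothetical, and not the RS.Math.Matrix4x4 implementation). It assumes matrices are stored row by row and applied to row vectors, and it does not try to confirm which operand order RS.Math.Matrix4x4.multiply uses.

```javascript
// Hypothetical helper: result = a * b for 4x4 matrices stored as 16 numbers in row order.
function multiply4x4(a, b) {
  const result = new Array(16).fill(0);
  for (let row = 0; row < 4; row++) {
    for (let col = 0; col < 4; col++) {
      for (let k = 0; k < 4; k++) {
        result[row * 4 + col] += a[row * 4 + k] * b[k * 4 + col];
      }
    }
  }
  return result;
}

// Translation and scaling values taken from the crystal example.
const translation = [
  1, 0, 0, 0,
  0, 1, 0, 0,
  0, 0, 1, 0,
  -2.0, 0.0, 4.5, 1
];
const scale = [
  0.2, 0, 0, 0,
  0, 0.2, 0, 0,
  0, 0, 0.2, 0,
  0, 0, 0, 1
];

// With row vectors, the left-hand matrix is applied to a point first.
const translateThenScale = multiply4x4(translation, scale);
const scaleThenTranslate = multiply4x4(scale, translation);

console.log('translate then scale, last row:', translateThenScale.slice(12, 15));
console.log('scale then translate, last row:', scaleThenTranslate.slice(12, 15));
```

In the first product the translation ends up multiplied by the scale factor, while in the second it is left untouched, which is why swapping the order moves the object to a different place.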

We could render the scene now, however before doing so let’s change the material being used on the imported object.

// Make a new material and override the existing one

let crystal_1_material = Mdl_material_instance.create(`${scene_name}_crystal_1_mat`,

'mdl::nvidia::core_definitions::thick_translucent', {

transmission_color: new RS.Math.Color(0.891, 0.0303, 0.0303),

volume_color: new RS.Math.Color(0.133, 0.0119, 0),

volume_scattering: 0.98,

roughness: 0.3,

reflectivity: 1.0,

base_thickness: 0.25,

ior: 1.61

}

);

crystal_1_instance.set_material(crystal_1_material, true);

Once again we create a new MDL material, this time using the thick_translucent material from the NVIDIA core_definitions module. The regular crystal model is blue so here we’ve made it red instead. The last line sets the material on the instance but with an important distinction, we set the second parameter to true. This tells RealityServer we want to override any materials lower down in the scene graph with the one we have assigned. This means the materials already assigned to the objects in the scene will be ignored and replaced with the one we just provided. Finally, don’t forget to actually attach our new creation to the scene.

// Attach our instance to the rootgroup

rootgroup.attach(crystal_1_instance);

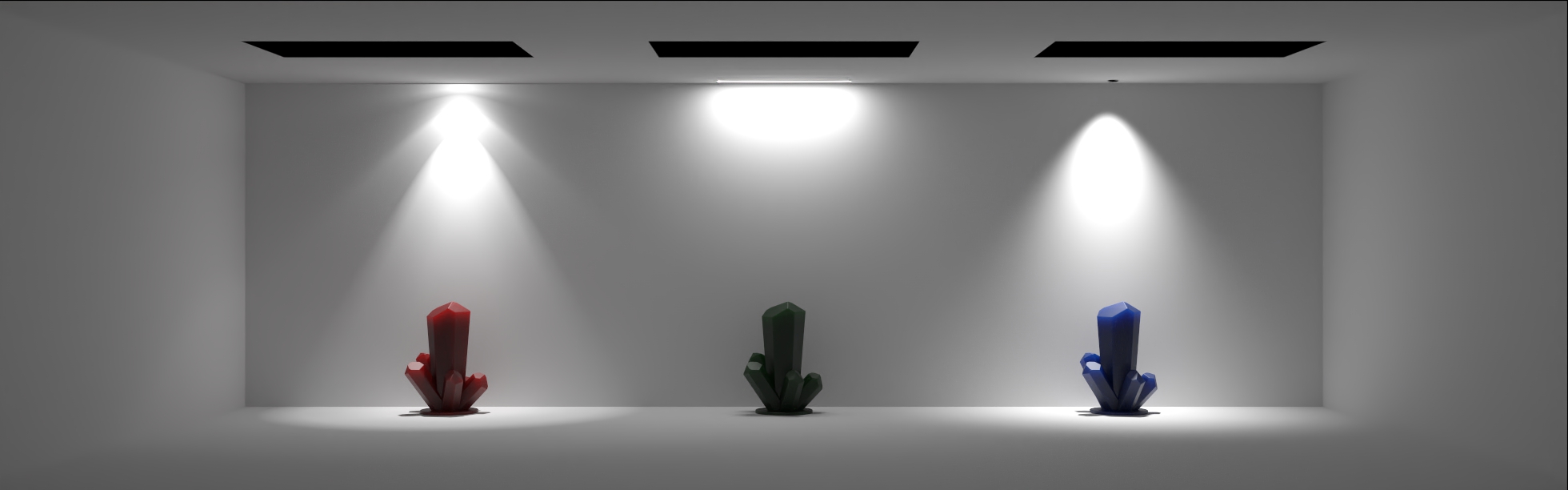

Now when rendering you should get something similar to this.

Repeat the process a couple more times with different parameters for the material and transformations and you should now have a result similar to that shown at the start of the article.

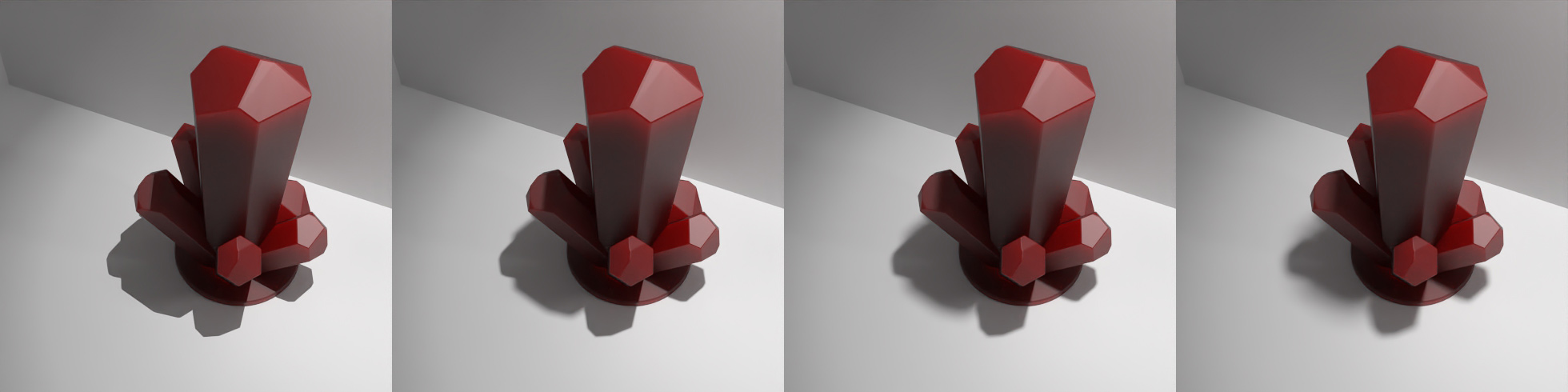

The effect of the size of the area source on shadowing was mentioned earlier. In the following image you can see this effect more directly. On the left we have a point source then as we move from left to right a disc area source with a radius of 0.05, 0.1 and 0.2 respectively. Note how the shadows become softer as we increase the size of the source while the contact shadows remain sharp.
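A rough way to reason about this is with similar triangles: the blurred edge of a shadow grows with the size of the light and with the gap between the object and the surface it shadows. The plain JavaScript sketch below uses that simplified geometric approximation. It is not how Iray computes shadows, and the distances used are made-up example values rather than measurements from the scene.

```javascript
// Simplified similar-triangles estimate of the width of a shadow's soft edge.
// lightDiameter: size of the emitting area
// lightToOccluder: distance from the light to the shadow-casting edge
// occluderToReceiver: distance from that edge to the surface receiving the shadow
function penumbraWidth(lightDiameter, lightToOccluder, occluderToReceiver) {
  return lightDiameter * occluderToReceiver / lightToOccluder;
}

// Disc radii from the comparison image; the distances are illustrative assumptions.
const radii = [0.05, 0.1, 0.2];
const widths = radii.map((radius) => penumbraWidth(2 * radius, 2.0, 0.5));

// Where the object touches the surface the gap is zero, so the edge stays sharp.
const contactWidth = penumbraWidth(2 * 0.2, 2.0, 0.0);

console.log('soft edge widths:', widths);
console.log('soft edge width at contact:', contactWidth);
```

Doubling the radius doubles the estimated soft edge, while the width at the point of contact stays at zero whatever the size of the source.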

To control the rendering process we need to set some termination conditions to tell RealityServer when to stop rendering. In our case we will use the number of iterations. Since we already have the options for the scene in an instance of the Options class we can just set attributes on it.

// Set termination conditions

options.attributes.set('progressive_rendering_max_samples', 250, 'Sint32');

options.attributes.set('progressive_rendering_quality_enabled', false, 'Boolean');

This sets the maximum number of iterations to 250 and disables the quality termination condition which is enabled by default. More samples will give a cleaner result with less noise, however even with a relatively low number of samples we can get a nice result by enabling the AI denoiser.

// Turn on AI denoising

options.attributes.set('post_denoiser_available', true, 'Boolean');

options.attributes.set('post_denoiser_enabled', true, 'Boolean');

options.attributes.set('post_denoiser_start_iteration', 250, 'Sint32');

We set the start iteration so that the denoiser only turns on at the end of rendering, otherwise intermediate frames would also be denoised (if not running using the batch scheduler).
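With this many attributes, on both the camera and the options, it can be tidier to keep them in a table and apply them in a loop. Below is a minimal sketch of that pattern in plain JavaScript. It relies only on the attributes.set(name, value, type) call used throughout this article. The applyAttributes helper is hypothetical, and FakeAttributes is a stand-in so the sketch runs outside RealityServer.

```javascript
// Stand-in for an element's attributes so this sketch runs on its own.
// Inside a real command you would pass the Options or Camera instance instead.
class FakeAttributes {
  constructor() {
    this.values = new Map();
  }
  set(name, value, type) {
    this.values.set(name, { value, type });
  }
}

// Hypothetical helper: apply a table of [name, value, type] rows to an element.
function applyAttributes(element, table) {
  for (const [name, value, type] of table) {
    element.attributes.set(name, value, type);
  }
}

// The termination and denoiser settings from the article, as a table.
const renderSettings = [
  ['progressive_rendering_max_samples', 250, 'Sint32'],
  ['progressive_rendering_quality_enabled', false, 'Boolean'],
  ['post_denoiser_available', true, 'Boolean'],
  ['post_denoiser_enabled', true, 'Boolean'],
  ['post_denoiser_start_iteration', 250, 'Sint32']
];

const fakeOptions = { attributes: new FakeAttributes() };
applyAttributes(fakeOptions, renderSettings);

console.log(`${fakeOptions.attributes.values.size} attributes set`);
```

Keeping the start iteration and the maximum sample count in one table also makes it harder for the two values to drift apart when you change one of them.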

The command was created with a daylight parameter, so if we set that to true we can render the same scene with daylight turned on. For all of the rendering I have been doing earlier I made a simple JSON-RPC request set and used Postman to make the requests. If you are not already familiar with how to call RealityServer commands see the article on Exploring the JSON-RPC API. Here is my request set.

[

{"jsonrpc": "2.0", "method": "create_scope", "params": {

"scope_name": "tutorial_scope"

}, "id": 1},

{"jsonrpc": "2.0", "method": "use_scope", "params": {

"scope_name": "tutorial_scope"

}, "id": 2},

{"jsonrpc": "2.0", "method": "tutorial_lights", "params": {

"scene_name": "tutorial_scene",

"daylight": true

}, "id": 3},

{"jsonrpc": "2.0", "method": "render", "params": {

"scene_name": "tutorial_scene",

"render_context_options": {

"scheduler_mode": {

"type": "String",

"value": "batch"

}

}

}, "id": 4},

{"jsonrpc": "2.0", "method": "delete_scope", "params": {

"scope_name": "tutorial_scope"

}, "id": 5}

]

This one just makes a scope, runs our custom tutorial_lights command and then renders the scene it produces, deleting the scope at the end. Using the above, which turns the daylight on, we get a result like this.
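If you want to switch between the daylight and artificial-only versions without editing JSON by hand, the request set can be generated instead. The plain JavaScript sketch below builds the same five calls shown above. The buildRequestSet helper and its default scope name are my own choices for illustration, and actually sending the result is left to whatever HTTP tool you prefer.

```javascript
// Hypothetical helper: build the JSON-RPC batch used in the article.
function buildRequestSet(sceneName, daylight, scopeName = 'tutorial_scope') {
  const calls = [
    ['create_scope', { scope_name: scopeName }],
    ['use_scope', { scope_name: scopeName }],
    ['tutorial_lights', { scene_name: sceneName, daylight: daylight }],
    ['render', {
      scene_name: sceneName,
      render_context_options: {
        scheduler_mode: { type: 'String', value: 'batch' }
      }
    }],
    ['delete_scope', { scope_name: scopeName }]
  ];
  // Number the requests 1..n in the order they should run.
  return calls.map(([method, params], index) => ({
    jsonrpc: '2.0',
    method: method,
    params: params,
    id: index + 1
  }));
}

// Same scene as above, but with daylight turned off.
const nightRequests = buildRequestSet('tutorial_scene', false);

console.log(JSON.stringify(nightRequests, null, 2));
```

The printed JSON can be pasted into Postman as the request body in place of the hand-written version.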

We’ve covered a lot of ground in this article and while the focus was on creating lights, there were also a lot of other common tasks covered. If you would like the completed command file you can download it below. Of course we’d love to hear about what you’re doing with RealityServer and how you are using dynamic lighting in your applications so get in touch.

Paul Arden has worked in the Computer Graphics industry for over 20 years, co-founding the architectural visualisation practice Luminova out of university before moving to mental images and NVIDIA to manage the Cloud-based rendering solution, RealityServer, now managed by migenius where Paul serves as CEO.