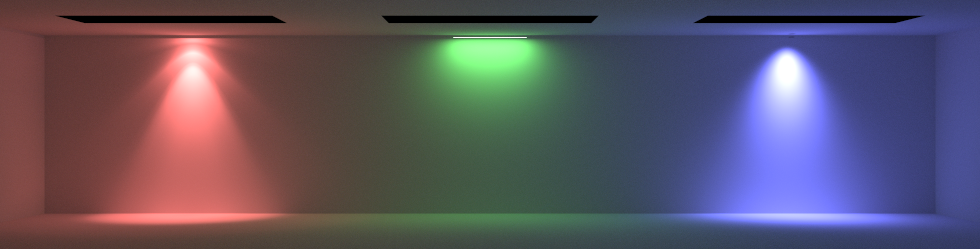

In this article I am going to show you how to add light sources to your RealityServer scene using the V8 server-side JavaScript API. You will learn how to add several different types of lights, including a photometric light using an IES data file, an area light, a spot light and daylight. This will be a very simple example but will give you all of the pieces you need to programmatically add lighting to your scene. You can expand on the concepts shown here to make different types of lighting very easily.

Paul Arden has worked in the Computer Graphics industry for over 20 years, co-founding the architectural visualisation practice Luminova out of university before moving to mental images and NVIDIA to manage the Cloud-based rendering solution, RealityServer, now managed by migenius where Paul serves as CEO.

We’ve had quite a few customers express interest in downloading content from AWS S3 rather than persistently storing it on their RealityServer instances. While this introduces latency, in many use cases it can still make a lot of sense. Our recently released HTTP Request functionality for V8 makes it easy to download content from public URLs. What do you do if your S3 buckets require authentication though? Let’s dive in.

Even though RealityServer is great at streaming fully interactive, server-side rendering directly to your browser, not every use case requires this level of interactivity. RealityServer has recently introduced a new feature called the Queue Manager which integrates with popular message queue services to manage rendering and other RealityServer tasks. In this article we will dive into the details on how to get up and running with this great new feature using Amazon SQS and S3 services.

RealityServer is a platform for integrating photorealistic 3D rendering into your application. It is based on a web services methodology and can be used both for rendering automation and fully interactive rendering. In this introduction we will cover the core concepts of RealityServer development and what you need to understand in order to get started.

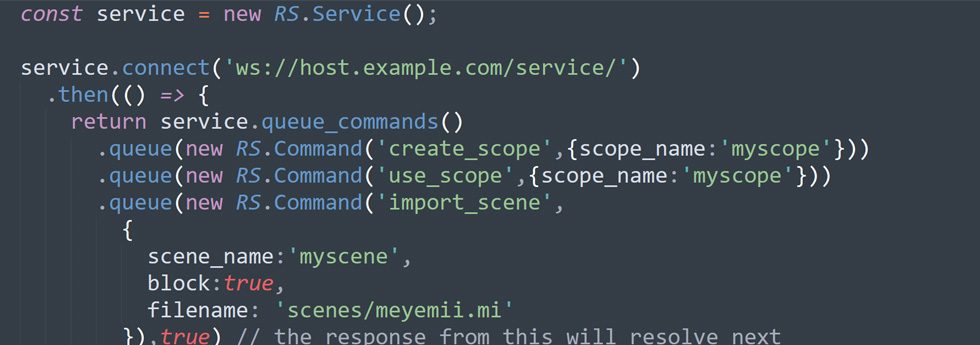

The legacy JavaScript client library shipped with RealityServer was designed in 2010, back when JavaScript in the browser was mostly unusable without some sort of framework like jQuery, WebSockets were still under design and JavaScript on the server was virtually unheard of. A lot has changed since then: JavaScript has significantly evolved and is supported by a huge module ecosystem, WebSockets are under the hood of everything and Node.js is becoming ubiquitous. The RealityServer client library, however, has barely changed. Until now.

So you’ve got your RealityServer release email and downloaded everything, but how do you install it? This article will take you through the steps of setting up RealityServer on Windows or Linux and also provide an overview of the RealityServer directory structure. We’ll also cover how to set up your licensing.

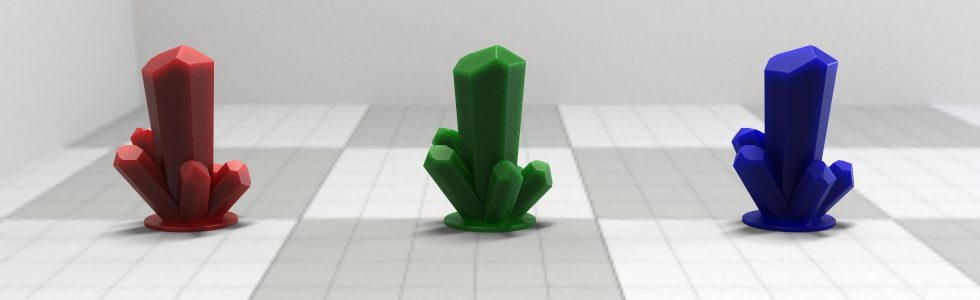

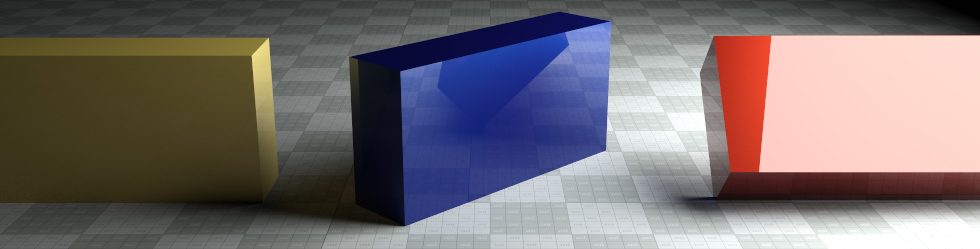

Continuing our series of articles on using the server-side V8 API, we will now add some objects, loaded from a model file, to the scene that we set up and lit in our previous articles. This one is going to be very simple, but for bonus points we’ll add multiple copies of the object in different locations.

In a previous post we covered creating an empty scene by writing a server-side V8 command in JavaScript. Now let’s turn on some light by adding an environment lighting setup to our empty scene. We’ll create two new commands: one to add lighting based on a spherical HDRI image and another using the built-in physically based sun and sky system.

We’ve covered server-side V8 commands before, but in this post we will go into a little more detail and use some of the helper classes that are provided with RealityServer to make common tasks easier. Quite often you want to kick off an application by creating a valid, empty scene ready for adding your content. Actually, it’s something we need to do in a lot of our posts here, so to avoid repeating it each time, let’s make a V8 command to do it for us.

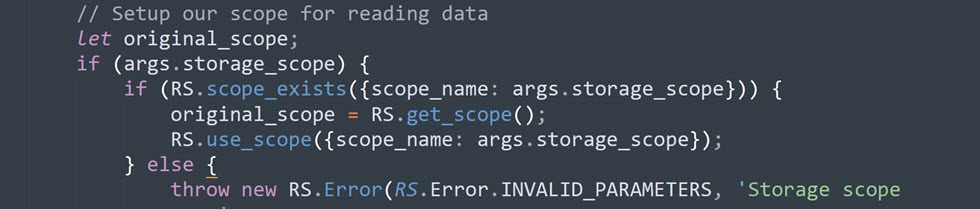

A core concept in RealityServer which many new users have some difficulty understanding is Scopes. The use of scopes is critical in making effective use of RealityServer in a production environment where multiple users or multiple independent operations are happening at once. In this article we will go into more depth on what scopes are and how to use them.

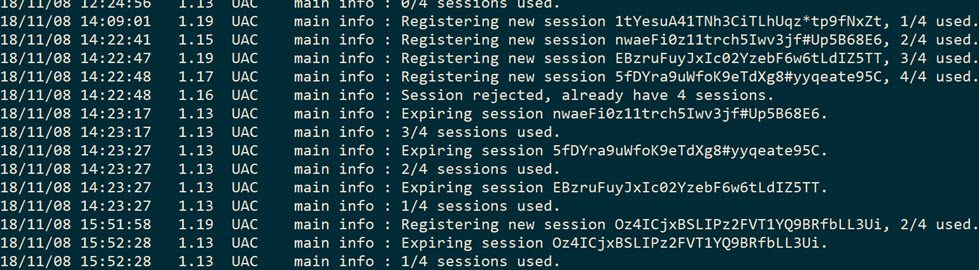

In this article we’ll take a quick look at how to use the UAC system in RealityServer to effectively manage user sessions and clean up server memory when users go away. There won’t be a lot of pretty pictures (well there is one if you make it to the end) but for those of you getting your hands dirty with RealityServer in production, you’ll get some valuable pointers to help keep your server from filling up with unused data.

In our last post we explored using the RealityServer compositing system to produce imagery for product configurators at scale. Check out that article first if you haven’t already, as it contains a great introduction to how the system works. In this follow-up post we will explore the possibilities of using the same system to modify the lighting in a scene without having to re-render, allowing us to build a lighting configurator.

RealityServer 5.1 introduced a new way to generate images of configurations of your scenes without the need to re-render them from scratch. We call this Compositing even though it’s actually very different to traditional compositing techniques. In this article we will dive into the detail of how to use the new system to render without rendering and speed up your configurator.

RealityServer 5.1 introduced new functionality for working with canvases in V8. In this post I’m going to show you how to do some basic things like resizing and accessing individual canvas pixels. We’ll build a fun little command to render a scene and process the result into a piece of interactive ASCII art. Of course, this doesn’t have much practical utility but it’s a great way to learn about this new feature!

A lot of new customers ask us where they can run RealityServer since they don’t have their own server or workstation with NVIDIA GPU hardware available. Starting up RealityServer on Nimbix, where everything is pre-configured for you, is covered in another article; on AWS, however, you need to do a bit more setup yourself. We are assuming here that you are already familiar with Amazon Web Services and starting instances on Amazon EC2, along with basic concepts like security groups. We won’t cover the basics of how to start an instance here, but there is lots of good information about that online, including this guide from Amazon. So, let’s get started.

The number of cloud service providers offering NVIDIA GPU resources is increasing, and in today’s article we will show you how to get started using RealityServer with Nimbix. migenius has deployed several of its customer projects on the Nimbix platform, and it offers some unique advantages such as containerised environments (instead of virtualisation), fast start-up times and usage charged by the minute instead of by the hour. On Nimbix, migenius has set up a pre-configured RealityServer environment for you. Keep reading to learn how to sign up for Nimbix services and get RealityServer up and running.

In this, the second part of our article on transformations, I will introduce SRT (Scaling, Rotation, Translation) transformations. Unlike the previous article, this one will have a lot less maths and shows you a simpler way to work with transformations in RealityServer. Additionally, the method allows for smooth, automatic interpolation of transformations over time, which is great for creating animations. Once things are moving you can also introduce motion blur for more realistic results. Read on to discover the ease of SRT transformations.

Transformations are fundamental to working with 3D scenes and something that can be frequently confusing to those who haven’t worked in 3D before. In this, the first of two articles, I will show you how to encode 3D transformations as a single 4×4 matrix which you can then pass into the appropriate RealityServer command to position, orient and scale objects in your scene. In a second part I will dive into a newer method of specifying transformations in RealityServer called SRT transformations, which also allows for the easy animation of objects.
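As a rough illustration of what encoding a transformation as a single 4×4 matrix means, here is a minimal Python sketch that composes a scale, a rotation about the Y axis and a translation into one matrix. The function names are hypothetical, and the column-vector convention used here is an assumption for illustration only; the layout and direction RealityServer expects are described in the article itself and may differ.

```python
import math

def translation(tx, ty, tz):
    """4x4 matrix that moves a point by (tx, ty, tz)."""
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]

def scaling(sx, sy, sz):
    """4x4 matrix that scales a point along each axis."""
    return [[sx, 0, 0, 0], [0, sy, 0, 0], [0, 0, sz, 0], [0, 0, 0, 1]]

def rotation_y(degrees):
    """4x4 matrix that rotates a point about the Y axis."""
    c = math.cos(math.radians(degrees))
    s = math.sin(math.radians(degrees))
    return [[c, 0, s, 0], [0, 1, 0, 0], [-s, 0, c, 0], [0, 0, 0, 1]]

def multiply(a, b):
    """Matrix product a * b of two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def transform_point(m, point):
    """Apply matrix m to a 3D point treated as the column vector (x, y, z, 1)."""
    v = [point[0], point[1], point[2], 1.0]
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(3))

# With column vectors the rightmost matrix is applied first:
# scale, then rotate, then translate (assumed convention, see above).
combined = multiply(translation(1, 2, 3),
                    multiply(rotation_y(90), scaling(2, 2, 2)))
print(transform_point(combined, (1, 0, 0)))
```

The point of the sketch is only that three separate operations collapse into one matrix, and that the order of multiplication matters.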

In this article I am going to show you how to add light sources to your RealityServer scene using the Web-services API. You will learn how to add several different types of lights, including a photometric light using an IES data file, an area light, a spot light and daylight. This will be a very simple example but will give you all of the pieces you need to programmatically add lighting to your scene. You can expand on the concepts shown here to make different types of lighting very easily.

RealityServer 4.4 build 1527.46 has just been released adding Iray 2016.1.1 which includes support for rendering stereo, spherical VR imagery suitable for viewing with devices such as the Oculus Rift, HTC Vive, Samsung GearVR, OSVR and Google Cardboard viewers. There are also numerous small additions and bug fixes and some other new features such as spectral rendering, however VR rendering is the headline item. In this article we will show you how to do simple VR rendering with RealityServer.

In this article I am going to show you how to create a simple 3D scene, completely from scratch using RealityServer. You will learn about the anatomy of a RealityServer scene and the different components that go into making it up, including options, groups, instances, cameras, geometry and environment lighting. While the scene will be very simple there will be many key principles of RealityServer and NVIDIA Iray demonstrated which you can expand on to build more complex scenes.

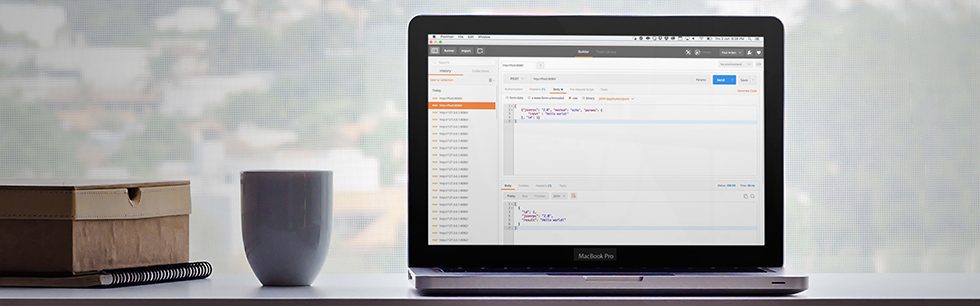

When getting started with RealityServer, many customers ask us the best place to begin in order to learn how RealityServer works. One of the best and most enjoyable ways we find is to explore the JSON-RPC API which remains the main way that RealityServer functionality is accessed. In this article we will provide an overview of how the RealityServer JSON-RPC API works and some of the best ways to explore and play with functionality exposed there. Whether you are new to RealityServer or a veteran user you will find some valuable pointers.

It’s MDL Monday again and this week I am going to show you how to put together a simple material for simulating diamonds, including dispersion based on an Abbe number. Now, I’m not a 3D artist by any means, but MDL allows me to create a material like this based on the real physical properties of diamonds rather than trying to tune abstract parameters. This material is very simple but very useful if you need to simulate jewellery. You can build on it easily to simulate other gemstones, glasses and similar substances without much effort. Today I’m just going to start with a basic, colourless diamond and cover some concepts which are important for creating physical materials.

NVIDIA Iray 2015 will introduce some great new features, including the ability to write your own procedural functions for use in materials. This is fantastic for creating resolution-independent effects which can cover large areas without noticeable tiling artifacts (unless you want them of course). Iray is built into our RealityServer product so I love to test out its latest features. To put procedural functions through their paces I decided to try to emulate something procedural from my childhood, the now famous 10 PRINT program. This little one-liner, originally designed to demonstrate the capabilities of the Commodore 64, prints a maze by randomly alternating between two diagonal characters. Iray uses the NVIDIA Material Definition Language (MDL) both to define materials and to define custom functions. If you haven’t tried it out, this little tutorial is a great way to get started.
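For readers who have not seen 10 PRINT before, the idea can be sketched in a few lines of Python. This is not the MDL version from the article, just an illustration of the pattern; the function name is hypothetical and ASCII slashes stand in for the Commodore 64's diagonal characters.

```python
import random

def ten_print(width, height, seed=None):
    """Return a maze-like pattern built by picking one of two diagonals per cell."""
    rng = random.Random(seed)  # a seed makes the pattern repeatable
    rows = []
    for _ in range(height):
        # Each cell is an independent random choice between "/" and "\".
        rows.append("".join(rng.choice("/\\") for _ in range(width)))
    return "\n".join(rows)

print(ten_print(40, 10))
```

Because every cell is chosen independently, the pattern can be extended to any size without repeating, which is the same property that makes procedural functions attractive for tiling-free materials.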

It sounds like such a simple question and it is asked by almost every new customer we engage. In reality, answering this can get complex quickly; however, there are a few basic ways to get some quick estimates. What we are really talking about here is Capacity Planning. As a more general reference on the subject of planning for scalable websites you could take a look at many of the books out there; one older but still great reference is Cal Henderson’s Building Scalable Websites.

Before setting out we should clarify that at migenius we are typically working with websites and applications with very different compute requirements to your average website. Normally servers are used for serving pages, database operations, perhaps running search engines and other tasks that would occupy only a small portion of any server’s resources when servicing a single user’s requests.